Honeycomb integrates with Kubernetes to collect your applications’ logs, cluster logs, and resource metrics so that you can answer all the questions you have.

The Honeycomb Kubernetes agent provides a flexible way to aggregate, structure, and enrich events from applications running on Kubernetes. The Honeycomb Kubernetes agent can also collect resource and status metrics from nodes, pods, containers, and volumes.

To use the Helm package, you will need Helm version 3 or later.

You can still install the agent using kubectl, though configuration may be more involved.

You will also need your Honeycomb API key.

Install using either Helm, or kubectl directly.

To install the agent using the Helm package manager:

helm repo add honeycomb https://honeycombio.github.io/helm-charts

helm install honeycomb honeycomb/honeycomb --set honeycomb.apiKey=YOUR_API_KEY

To install the agent using kubectl:

# Create the honeycomb namespace
kubectl create namespace honeycomb

kubectl create secret generic honeycomb --namespace honeycomb \
  --from-literal=api-key=YOUR_API_KEY

kubectl apply -f https://raw.githubusercontent.com/honeycombio/honeycomb-kubernetes-agent/main/examples/quickstart.yaml

The agent will collect logs based on configured watchers.

These watchers will match pods based on the configured selection and parse the logs for the pod using the specified parser.

The Helm chart exposes a watchers property that you can use.

If installed using kubectl, you will need to modify the watchers section of the ConfigMap.

A configuration to collect and parse logs from the Kubernetes controller manager and scheduler would look like this:

watchers:
  - dataset: kubernetes-logs
    labelSelector: component=kube-controller-manager
    namespace: kube-system
    parser: glog
  - dataset: kubernetes-logs
    labelSelector: component=kube-scheduler
    namespace: kube-system
    parser: glog

An optional configuration is to capture Kubernetes events using the Heptio Eventrouter component.

Configure the Eventrouter to use the json sink for logs, and you can capture them with this watchers configuration:

watchers:
  - dataset: k8s-eventrouter
    labelSelector: app=eventrouter
    namespace: olly
    parser: json
    processors:
      - drop_field:
          field: old_event

The agent collects metrics from nodes, pods, containers, and volumes.

For each collection interval, an event for each resource will be sent to Honeycomb that contains all collected metrics as fields to the event.

Kubernetes labels for the resources will be added as fields to the event, each with a "label." prefix.

Pods and containers also get additional status and restart metrics.
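As a rough illustration of the event shape described above, the following Python sketch flattens one resource's metrics and labels into a single event. The function name and the sample values are made up for illustration and are not taken from the agent; only the "label." prefix and the k8s.resource.type field come from this page.

```python
def build_metrics_event(resource_type, metrics, labels):
    """Flatten one resource's metrics and labels into a single event dict."""
    event = {"k8s.resource.type": resource_type}
    # Each collected metric becomes a field on the event.
    event.update(metrics)
    # Each Kubernetes label becomes a field with a "label." prefix.
    for name, value in labels.items():
        event[f"label.{name}"] = value
    return event


# Hypothetical sample values for a single pod.
event = build_metrics_event(
    "pod",
    {"metrics.cpu.usage": 0.12, "metrics.memory.usage": 52428800},
    {"app": "frontend-web"},
)
print(event)
```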

By default, metrics are collected every 10 seconds, and only for node and pod resources.

Metrics collected depend on the Kubernetes resource type.

You can filter metrics on the k8s.resource.type field.

| metric | node | pod | container | volume |
|---|---|---|---|---|
| metrics.cpu.usage | x | x | x | |
| metrics.cpu.utilization | x | x | x | |
| metrics.filesystem.available | x | x | x | |
| metrics.filesystem.capacity | x | x | x | |
| metrics.filesystem.usage | x | x | x | |
| metrics.memory.available | x | x | x | |
| metrics.memory.major_page_faults | x | x | x | |
| metrics.memory.page_faults | x | x | x | |
| metrics.memory.rss | x | x | x | |
| metrics.memory.usage | x | x | x | |
| metrics.memory.utilization | x | x | x | |
| metrics.memory.working_set | x | x | x | |
| metrics.network.bytes.receive | x | x | | |
| metrics.network.bytes.send | x | x | | |
| metrics.network.errors.receive | x | x | | |
| metrics.network.errors.send | x | x | | |
| metrics.uptime | x | x | x | |
| metrics.volume.available | | | | x |
| metrics.volume.capacity | | | | x |
| metrics.volume.inodes.free | | | | x |
| metrics.volume.inodes.total | | | | x |
| metrics.volume.inodes.used | | | | x |
| metrics.volume.used | | | | x |
| status.exitcode | | | x | |
| status.message | | x | x | |
| status.phase | | x | | |
| status.ready | | | x | |
| status.reason | | x | x | |
| status.restart | | x | x | |
| status.restart_count | | x | x | |
| status.restart_delta | | x | x | |
| status.state | | | x | |

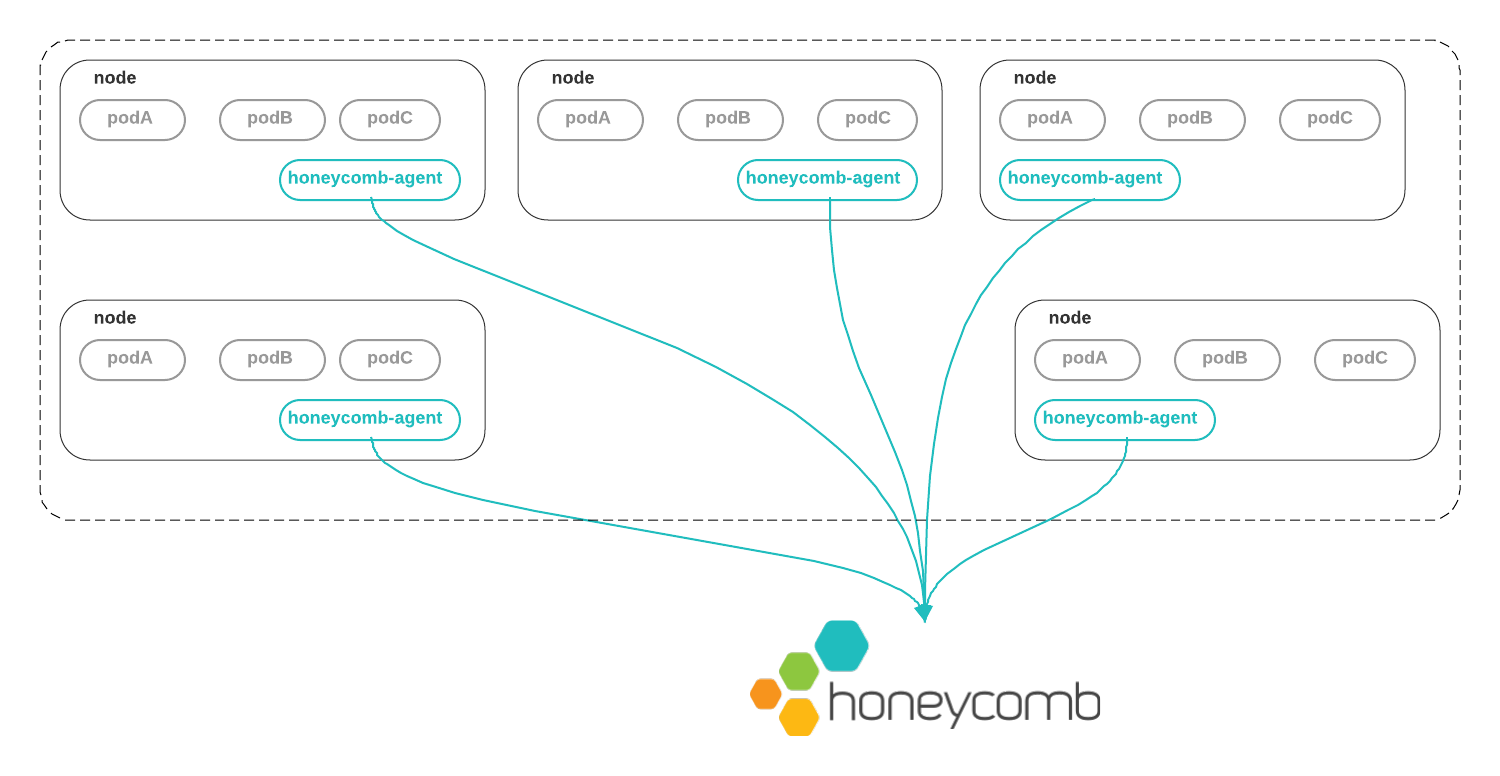

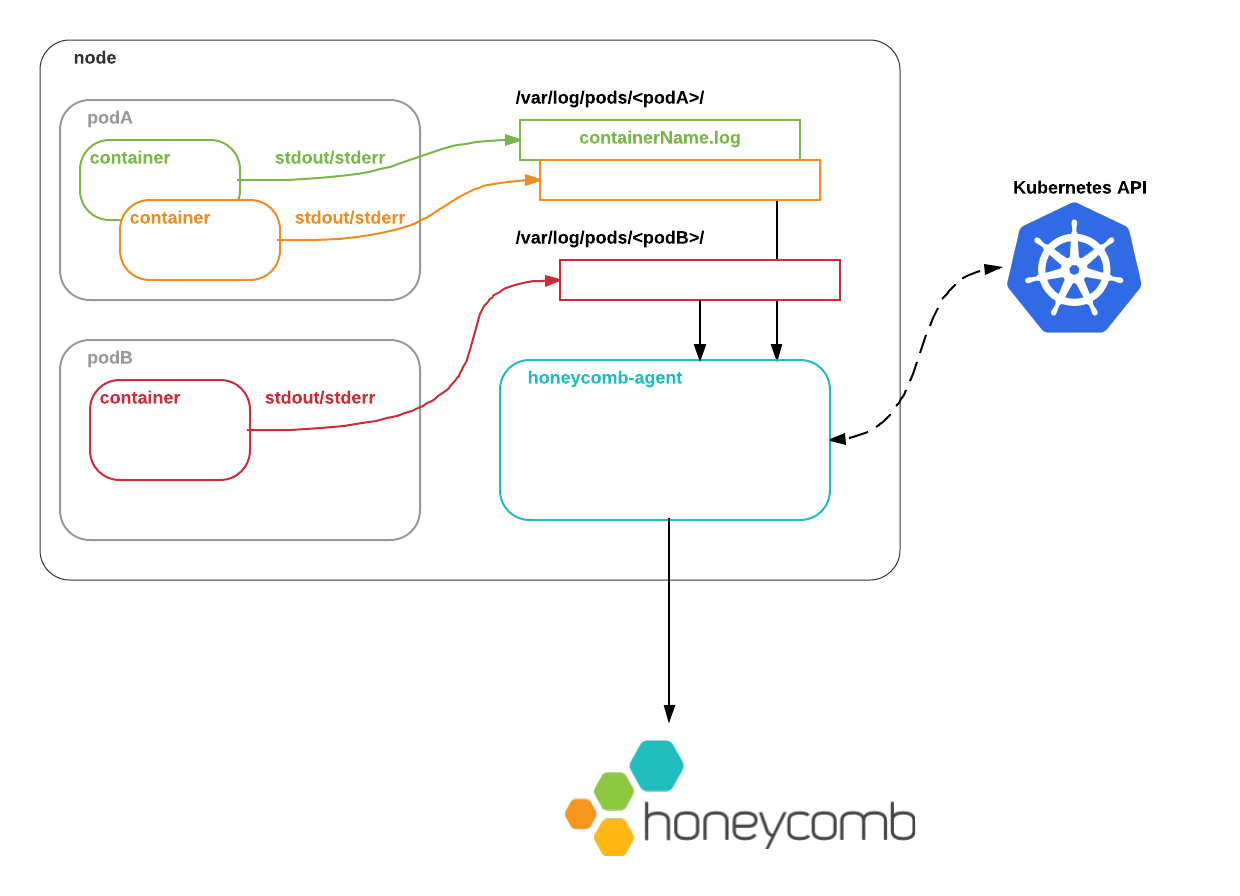

The Honeycomb Kubernetes Agent runs as a DaemonSet. That is, one copy of the agent runs on each node in the cluster.

Metrics for resources running on the node are collected by communicating with the local node’s kubelet directly.

Logs from containers’ stdout and stderr are written by the Docker daemon to the local node filesystem.

The Honeycomb Kubernetes Agent reads these logs, augments them with metadata from the Kubernetes API, and ships them to Honeycomb so that you can observe what is going on.

The Honeycomb Kubernetes Agent’s configuration file describes which pods’ logs to process, and how to handle them, as well as which resource types to collect metrics from.

What metadata does the agent add to these logs?

- pod.labels
- pod.name
- pod.namespace
- pod.resourceVersion
- pod.UID
- pod.nodeName
- pod.nodeSelector
- pod.serviceAccountName
- pod.subdomain
- pod.annotations
- container.args
- container.command
- container.name
- container.env
- container.image
- container.ports
- container.VolumeMounts
- container.workingDir
- container.resources

The above metadata is added by default.

Node metadata can be added by specifying includeNodeLabels: true within the agent’s metrics configuration.

You can modify the agent’s configuration via the ConfigMap deployed.

By default, Kubernetes resource metrics and logs from the controller and scheduler will be collected.

Applications in Kubernetes tend to use different logging formats. In our opinion, your own applications should use a structured, self-describing log format such as JSON. But Kubernetes system components use the glog format, reverse proxies and ingress controllers may use a combined log format, and so on.

You might also want to aggregate events only from specific services, rather than from everything that might be running in a cluster. Or you might want to send logs from different services to different datasets.

To accommodate these real-world use cases, you can customize the Honeycomb Kubernetes Agent’s behavior with a YAML configuration file.

Ordinarily, you will create this file as a Kubernetes ConfigMap that will be mounted inside the agent container.

Metrics collection can be configured using the metrics configuration property.

metrics:
  enabled: true
  dataset: kubernetes-metrics
  clusterName: MyCluster
  metricGroups:
    - node
    - pod

A clusterName must be specified for each cluster.

By default, only node and pod metrics will be collected.

The following table describes all properties for metrics configuration:

| key | required? | type | description |
|---|---|---|---|
| enabled | yes | bool | Enables metrics to be collected and sent to Honeycomb. default: false |
| dataset | yes | string | Name of dataset to send events to. default: kubernetes-metrics |
| clusterName | yes | string | Name of Kubernetes cluster. Will be emitted as a field to Honeycomb. default: k8s-cluster |
| interval | no | string | Collection interval in time duration format, which is specified with a duration suffix. Valid time units are ns, us (or µs), ms, s, m, h. default: 10s |
| metricGroups | no | list | Resource groups to collect metrics from. Valid values are: node, pod, container, volume. default: node, pod |
| omitLabels | no | list | Labels in this list will not be collected and sent as fields to Honeycomb. default: nil |
| additionalFields | no | map | A map of field names and values to apply to each metric event. default: nil |
| includeNodeLabels | no | bool | If enabled, attaches node metadata to metric events. Node labels will respect the omitLabels list. |

The following configuration collects metrics from nodes, pods, containers, and volumes every 10 seconds.

The auto-generated controller-revision-hash label will be omitted, and additional fields for region and az will be added.

metrics:
  enabled: true
  dataset: kubernetes-metrics
  clusterName: k8s-cluster
  interval: 10s
  metricGroups:
    - node
    - pod
    - container
    - volume
  omitLabels:
    - controller-revision-hash
  additionalFields:
    region: us-east
    az: us-east-1a

Logs parsing and collection can be configured using the watchers configuration property.

watchers:
  - labelSelector: "app=nginx"
    parser: nginx
    dataset: kubernetes-nginx
  - labelSelector: "app=frontend"
    parser: json
    dataset: kubernetes-frontend

Each block in the watchers list describes a set of pods whose logs you want to handle in a specific way, and has the following keys:

| key | required? | type | description |
|---|---|---|---|
| labelSelector | yes* | string | A Kubernetes label selector identifying the set of pods to watch. |
| parser | yes | string | Describes how this watcher should parse events. |
| dataset | yes | string | The dataset that this watcher should send events to. |
| containerName | no | string | If you only want to consume logs from one container in a multi-container pod, the name of the container to watch. |
| processors | no | list | A list of processors to apply to events after they are parsed. |
| namespace | no | string | The Kubernetes namespace the pods are located in. If not supplied, the default namespace is used. |
| paths | no | string array | Glob-style* paths to the log files. If not supplied, the default Kubernetes log paths and filenames are used. |
| exclude | no | string array | Glob-style* paths for files to exclude from consideration. If a given file matches an exclude, it will not be watched. If not supplied, no files are excluded. |

“Glob-style” means:

- "*" matches any sequence of non-path-separators.
- "/**/" matches zero or more directories.
- "?" matches any single non-path-separator character.

To check a configuration file without needing to deploy it into the cluster, you can run the Honeycomb Kubernetes Agent container locally with the --validate flag:

docker run -v /FULL/PATH/TO/YOUR/config.yaml:/etc/honeycomb/config.yaml honeycombio/honeycomb-kubernetes-agent:head --validate
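Returning to the glob-style rules listed above, the following Python sketch shows one way those three rules could be interpreted when deciding whether a path matches a paths or exclude entry. It is an illustration under the stated rules only, not the agent's actual matcher; the function names and the sample log paths are hypothetical.

```python
import re


def glob_to_regex(pattern):
    """Translate a glob that uses *, ? and /**/ into a compiled regex."""
    out = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("/**/", i):
            out.append(r"/(?:[^/]+/)*")  # zero or more directories
            i += 4
        elif pattern[i] == "*":
            out.append(r"[^/]*")  # any sequence of non-path-separators
            i += 1
        elif pattern[i] == "?":
            out.append(r"[^/]")  # any single non-path-separator character
            i += 1
        else:
            out.append(re.escape(pattern[i]))  # literal character
            i += 1
    return re.compile("".join(out) + r"\Z")


def matches(pattern, path):
    """Return True if the whole path matches the glob pattern."""
    return glob_to_regex(pattern).match(path) is not None


# Hypothetical paths, for illustration only.
print(matches("/var/log/*.log", "/var/log/app.log"))
print(matches("/var/log/*.log", "/var/log/nginx/app.log"))
print(matches("/var/log/**/*.log", "/var/log/nginx/app.log"))
```

Note that "*" deliberately stops at a path separator, so matching files in nested directories requires "/**/".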

To make a configuration file visible to the Honeycomb Kubernetes Agent inside a Kubernetes cluster, you will need to create a Kubernetes ConfigMap from it.

To create a brand-new ConfigMap from a local file config.yaml, run:

kubectl create configmap honeycomb-agent-config --from-file=config.yaml

To replace an existing ConfigMap, you can run:

kubectl create configmap honeycomb-agent-config \
  --from-file=config.yaml --output=yaml \
  --dry-run=client | kubectl replace --filename=-

Then restart running agent pods:

kubectl delete pod --selector k8s-app=honeycomb-agent

Currently, the following parsers are supported:

Does no parsing on logs, and submits an event with the entire contents of the log line in a "log" field, plus the aforementioned Kubernetes metadata.

Use this if you just want the “raw” log line, or if your log line structure does not match one of the parsers below.

You can still query datasets with raw log lines to some degree using string filters and calculated fields, but structuring your logs is strongly encouraged.

JSON is a great format for structured logs. With the JSON parser, we map JSON key/value pairs to event fields.

Parses NGINX access logs.

If you are using a custom NGINX log format, you can specify the format using the following configuration:

watchers:
  - labelSelector: "io.kompose.service=nginx"
    dataset: nginx-inner
    parser:
      name: nginx
      options:
        log_format: '$remote_addr - $remote_user [$time_local] $host "$request" $status $bytes_sent $body_bytes_sent $request_time "$http_referer" "$http_user_agent" $request_length "$http_authorization" "$http_x_forwarded_proto" "$http_x_forwarded_for" $server_name'

Parses logs produced by glog, which look like this:

I0719 23:09:54.422170 1 kube.go:118] Node controller sync successful

This format is commonly used by Kubernetes system components, such as the API server.
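To make the structure of a glog line concrete, here is a minimal Python sketch that splits the sample line above into its parts (severity letter, date, time, thread ID, source file and line, message). The regular expression and the field names are illustrative assumptions; the fields the agent actually emits may be named differently.

```python
import re

# Assumed layout: Lmmdd hh:mm:ss.uuuuuu threadid file:line] message
GLOG_LINE = re.compile(
    r"^(?P<level>[IWEF])"  # I=info, W=warning, E=error, F=fatal
    r"(?P<month>\d{2})(?P<day>\d{2}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+"
    r"(?P<thread_id>\d+) "
    r"(?P<file>[^:]+):(?P<line>\d+)\] "
    r"(?P<message>.*)$"
)


def parse_glog(line):
    """Return a dict of glog fields, or None if the line does not match."""
    match = GLOG_LINE.match(line)
    return match.groupdict() if match else None


sample = "I0719 23:09:54.422170 1 kube.go:118] Node controller sync successful"
print(parse_glog(sample))
```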

Parses logs produced by redis 3.0+, which look like this:

1:M 08 Aug 22:59:58.739 * Background saving started by pid 43

Thanks to MacRae Linton for contributing the Redis parser.
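For reference, the Redis 3.0+ line shown above has the shape pid:role, timestamp, level marker, message. The Python sketch below splits the sample line along those boundaries. The regular expression and the field names are assumptions made for illustration and do not necessarily match the fields produced by the agent's Redis parser.

```python
import re

# Assumed layout: pid:role dd Mon hh:mm:ss.mmm level message
REDIS_LINE = re.compile(
    r"^(?P<pid>\d+):(?P<role>[XCSM]) "  # X=sentinel, C=child, S=replica, M=master
    r"(?P<timestamp>\d{2} \w{3} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?P<level>[.\-*#]) "  # .=debug, -=verbose, *=notice, #=warning
    r"(?P<message>.*)$"
)


def parse_redis(line):
    """Return a dict of Redis log fields, or None if the line does not match."""
    match = REDIS_LINE.match(line)
    return match.groupdict() if match else None


sample = "1:M 08 Aug 22:59:58.739 * Background saving started by pid 43"
print(parse_redis(sample))
```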

Parses logs in key=value format, such as:

time=2022-05-15T05:43:19Z msg="server response - time 12ms code: 401 - request: GET /hello ..."

Key-value formatted logs often have a special prefix, such as a log level.

INFO: time=2022-05-15T05:43:19Z msg="server response - time 12ms code: 401 - request: GET /hello ..."

To parse lines whose prefix contains fields without an equal sign, and to ensure those fields are extracted properly in Honeycomb, specify a regular expression for the prefix in the configuration.

watchers:
  - labelSelector: "com.myco.logging.keyvalformat=true"
    parser:
      name: keyval
      options:
        prefixRegex: "(?P<loglevel>[A-Z]+): (?P<timestamp>time=[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}Z )"
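To see what the named groups in this prefixRegex capture, the expression can be tried against the prefixed sample line in Python, whose re module accepts the same (?P<name>...) group syntax. This is only a way to experiment with the pattern; how the agent handles the rest of the line after the prefix is assumed here, not confirmed.

```python
import re

PREFIX = re.compile(
    r"(?P<loglevel>[A-Z]+): "
    r"(?P<timestamp>time=[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}Z )"
)

line = (
    'INFO: time=2022-05-15T05:43:19Z '
    'msg="server response - time 12ms code: 401 - request: GET /hello ..."'
)

match = PREFIX.match(line)
prefix_fields = match.groupdict()  # one entry per named group
remainder = line[match.end():]     # text left over after the prefix

print(prefix_fields)
print(remainder)
```

With this pattern the timestamp group includes the leading "time=" and the trailing space, because both sit inside the group's parentheses.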

If whitespace-separated text is found that does not contain =, then it will be interpreted as a key without a value.

For example, if a log contains text:

This is a test

Then, the keyval parser will create an event that looks similar to:

{
  "This": true,
  "is": true,
  "a": true,
  "test": true
}

This will cause an explosion of columns in Honeycomb.

As this is a deliberate choice by the keyval parser, extra care should be taken when using it.
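The behavior described above can be approximated in a few lines of Python. This sketch uses shlex to keep quoted values together and treats any token without an equal sign as a key whose value is true. It is a simplified stand-in for illustration, not the agent's keyval implementation, and it will raise an error on lines with unbalanced quotes.

```python
import shlex


def parse_keyval(line):
    """Parse key=value tokens; bare words become keys with the value True."""
    event = {}
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        # sep is empty when the token contains no "=" at all.
        event[key] = value if sep else True
    return event


print(parse_keyval(
    'time=2022-05-15T05:43:19Z '
    'msg="server response - time 12ms code: 401 - request: GET /hello ..."'
))
print(parse_keyval("This is a test"))
```

Running the second call shows why free-form text is risky with this parser: every distinct word becomes its own field.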

Processors transform events after they are parsed. Currently, the following processors are supported:

The additional_fields processor accepts a static map of field names and values and appends those to every event it processes.

These values will overwrite existing fields of the same name, if they exist.

For example, with the following configuration:

processors:
  - additional_fields:
      environment: production
      owner: me@example.com

The fields environment and owner will be added to the event.
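The overwrite behavior of additional_fields can be pictured as a dictionary merge in which the configured values win. The Python sketch below is only an illustration of that rule; the function name and the sample event are hypothetical.

```python
def additional_fields(event, fields):
    """Return a copy of event with the static fields applied on top."""
    merged = dict(event)
    merged.update(fields)  # configured values overwrite existing ones
    return merged


# Hypothetical parsed event that already has an "owner" field.
event = {"msg": "hello", "owner": "someone-else"}
static = {"environment": "production", "owner": "me@example.com"}
print(additional_fields(event, static))
```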

The sample processor will only send a subset of events to Honeycomb.

Honeycomb natively supports sampled event streams, allowing you to send a representative subset of events while still getting high-fidelity query results.

Options:

| key | type | description |
|---|---|---|
| type | "static" or "dynamic" | How events should be sampled. |
| rate | integer | The rate at which to sample events. Specifying a sample rate of 20 will cause one in 20 events to be sent. |
| keys | list of strings | The list of field keys to use when doing dynamic sampling. |
| windowsize | integer | How often to refresh estimated sample rates during dynamic sampling, in seconds. Default: 30s. |
| minEventsPerSec | integer | Whenever the number of events per second being processed falls below this value for a time window (see windowSize), sampling will be disabled for the next time window (all events will be sent with a sample rate of 1). Default: 50. The minimum possible value is 1. |
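As an illustration of what a static rate means, the following Python sketch keeps roughly one in every rate events and pairs each kept event with the rate it was sampled at. It is a simplified model under the assumption of independent random selection; the agent's actual sampling code may differ, and dynamic sampling is not covered here.

```python
import random


def static_sample(events, rate, rng=None):
    """Yield (event, sample_rate) for roughly one in `rate` events."""
    if rng is None:
        rng = random.Random()
    for event in events:
        # randrange(rate) is 0 with probability 1/rate.
        if rng.randrange(rate) == 0:
            yield event, rate


# Seeded generator so the run is repeatable.
kept = list(static_sample(range(10000), 20, random.Random(42)))
print(len(kept), "of 10000 events kept")
```

Carrying the rate alongside each kept event is what lets a backend that understands sampling scale counts back up when answering queries.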

The drop_field processor will remove the specified field from all events before sending them to Honeycomb.

This is useful for removing sensitive information from events.

Options:

| key | value | description |
|---|---|---|
| field | string | The name of the field to drop. |

The request_shape processor will take a field representing an HTTP request, such as GET /api/v1/users?id=22 HTTP/1.1, and unpack it into its constituent parts.

Options:

| key | value | description |
|---|---|---|
| field | string | The name of the field containing the HTTP request (for example, "request") |
| patterns | list of strings | A list of URL patterns to match when unpacking the request |
| queryKeys | list of strings | An allowlist of keys in the URL query string to unpack |
| prefix | string | A prefix to prepend to the unpacked field names |

For example, with the following configuration:

processors:
  - request_shape:
      field: request
      patterns:
        - /api/:version/:resource
      queryKeys:
        - id

the request_shape processor will expand the event

{"request": "GET /api/v1/users?id=22 HTTP/1.1", ...}

into

{
  "request": "GET /api/v1/users?id=22 HTTP/1.1",
  "request_method": "GET",
  "request_protocol_version": "HTTP/1.1",
  "request_uri": "/api/v1/users?id=22",
  "request_path": "/api/v1/users",
  "request_query": "id=22",
  "request_shape": "/api/:version/:resource?id=?",
  "request_path_version": "v1",
  "request_path_resource": "users",
  "request_pathshape": "/api/:version/:resource",
  "request_queryshape": "id=?",
  "request_query_id": "22",
  ...
}
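The expansion above can be reproduced with a short Python sketch, which may help when reasoning about which fields a given pattern and queryKeys list will produce. This is a simplified reimplementation written for illustration: it only handles ":name" path segments, sorts query keys when building the query shape, and ignores the prefix option. The agent's own logic may handle more cases.

```python
from urllib.parse import parse_qsl


def request_shape(event, field, patterns=(), query_keys=()):
    """Unpack an HTTP request line into method, path, query and shape fields."""
    method, uri, protocol = event[field].split(" ", 2)
    path, _, query = uri.partition("?")
    out = dict(event)
    out[f"{field}_method"] = method
    out[f"{field}_protocol_version"] = protocol
    out[f"{field}_uri"] = uri
    out[f"{field}_path"] = path
    out[f"{field}_query"] = query

    # Use the first pattern whose segments line up with the path.
    path_shape = path
    segments = path.strip("/").split("/")
    for pattern in patterns:
        wanted = pattern.strip("/").split("/")
        if len(wanted) != len(segments):
            continue
        captured = {}
        for want, got in zip(wanted, segments):
            if want.startswith(":"):
                captured[want[1:]] = got
            elif want != got:
                break
        else:  # no literal segment mismatched
            path_shape = pattern
            for name, value in captured.items():
                out[f"{field}_path_{name}"] = value
            break

    pairs = parse_qsl(query, keep_blank_values=True)
    query_shape = "&".join(f"{key}=?" for key in sorted(k for k, _ in pairs))
    for key, value in pairs:
        if key in query_keys:  # only allowlisted keys are unpacked
            out[f"{field}_query_{key}"] = value

    out[f"{field}_pathshape"] = path_shape
    out[f"{field}_queryshape"] = query_shape
    out[f"{field}_shape"] = path_shape + (f"?{query_shape}" if query_shape else "")
    return out


expanded = request_shape(
    {"request": "GET /api/v1/users?id=22 HTTP/1.1"},
    field="request",
    patterns=["/api/:version/:resource"],
    query_keys=["id"],
)
print(expanded)
```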

The timefield processor will replace the default timestamp in an event with one extracted from a specific field in the event.

Options:

| key | value | description |
|---|---|---|
| field | string | The name of the field containing the timestamp |
| format | string | The format of the timestamp found in timefield, in strftime or Golang format |

Note: This processor is not generally necessary when collecting pod logs. The Honeycomb Kubernetes Agent will automatically use the timestamp recorded by the Docker json-log driver. It is useful when parsing logs that live at a particular path on the node filesystem, such as Kubernetes audit logs.
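As a sketch of the idea behind timefield, the Python function below parses a timestamp out of a named field using a strftime-style format and falls back to the event's existing timestamp when the field is missing or does not parse. The fallback behavior, the UTC assumption for timestamps without an offset, and the field name "ts" are all assumptions made for illustration rather than documented agent behavior.

```python
from datetime import datetime, timezone


def apply_timefield(event, field, fmt, default_time):
    """Return the timestamp parsed from event[field], or default_time."""
    raw = event.get(field)
    if raw is None:
        return default_time
    try:
        parsed = datetime.strptime(raw, fmt)
    except ValueError:
        return default_time  # unparseable value: keep the default timestamp
    if parsed.tzinfo is None:
        # Assumption: timestamps without an offset are treated as UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


fallback = datetime(2000, 1, 1, tzinfo=timezone.utc)
event = {"ts": "2022-05-15T05:43:19Z", "msg": "hello"}
print(apply_timefield(event, "ts", "%Y-%m-%dT%H:%M:%SZ", fallback))
```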

Here are some example Honeycomb Kubernetes Agent configurations.

Parse logs from pods labelled with app: nginx:

---
writekey: "YOUR_API_KEY"
watchers:
  - labelSelector: app=nginx
    parser: nginx
    dataset: nginx-kubernetes
    processors:
      - request_shape:
          field: request

Send logs from different services to different datasets:

---
writekey: "YOUR_API_KEY"
watchers:
  - labelSelector: "app=nginx"
    parser: nginx
    dataset: nginx-kubernetes
  - labelSelector: "app=frontend-web"
    parser: json
    dataset: frontend

Sample events from a frontend-web deployment: only send one in 20 events from the prod namespace, and one in 10 events from the staging namespace.

---
writekey: "YOUR_API_KEY"
watchers:
  - labelSelector: "app=frontend-web"
    namespace: prod
    parser: json
    dataset: frontend
    processors:
      - sample:
          type: static
          rate: 20
      - drop_field:
          field: user_email
  - labelSelector: "app=frontend-web"
    namespace: staging
    parser: json
    dataset: frontend
    processors:
      - sample:
          type: static
          rate: 10

Get logs from a multi-container pod, but only from the sidecar container:

---
writekey: "YOUR_API_KEY"
watchers:
  - labelSelector: "app=frontend-web"
    containerName: sidecar
    parser: json
    dataset: frontend