A self-service migration from Classic Datasets to Environments is available to all teams. When you migrate, the data you send to Honeycomb changes destination from your Classic dataset(s) to your new Environment(s).

Migration assistance is available for Enterprise teams. Contact your Honeycomb Success Representative for more details.

Before proceeding, complete the Migration Preparation checklist to ensure a smoother migration.

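The migration process includes the following tasks:

1. Create a new Environment.
2. Update your instrumentation libraries.
3. Migrate Refinery, if you use it.
4. Update your API Keys.
5. Recreate your Honeycomb features and configurations.
6. Post-Migration:
   - Review your Environment and configurations.
   - Remove unnecessary service name references.
   - Delete your Classic Environment.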

First, create a new Environment in Honeycomb.

You must be a team owner to create an Environment. Creating an Environment also creates a new API Key by default. Afterward, you can create additional API Keys according to best practices. In a later step, you will use this API Key in your instrumentation so that Honeycomb sends your data to the new Environment.

To create a new Environment in Honeycomb:

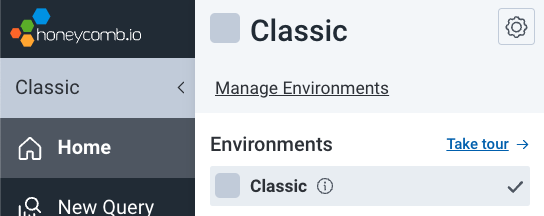

1. Select the label below the Honeycomb logo in the left navigation menu to reveal the Environments list.

   When you are working in Honeycomb Classic, the label shows Classic on a gray background.

2. Select Manage Environments. The Environments summary appears.

3. Select Create Environment in the top right corner. A modal appears.

4. Enter a name for the Environment (required). Optionally, enter a description and choose a representative color from the dropdown.

5. Select Create Environment. The new Environment appears in the Environments summary.

6. Select View API Keys in your new Environment's row. The Environment's API Keys appear in a list.

7. Use the Copy icon to copy the API Key for use in your instrumentation.

Ensure the API Key has the Send Events and Create Datasets permissions, which it needs to send events and to create new datasets from traces.

Next, update each tracing instrumentation library to at least the minimum version that supports Environments and Services. We recommend the latest version so that you get each library's full set of features. Refer to the instrumentation version updates list from your migration preparation.

If you use Refinery, first complete the steps in the Refinery Migration section before continuing.

Otherwise, proceed to the next step.

In your instrumentation, replace each Honeycomb API Key with an API Key from your new Environment. Changing your API Keys sends data to your new Environment and automatically creates new datasets.

Anything that calls the Honeycomb API also needs an updated API Key.

Trace data is associated with an Environment implicitly, through the API Key used to send it. For Service datasets, you no longer need to specify a Dataset name when submitting trace data.

General datasets still require a Dataset name to submit data. General datasets include logs and metrics, and you should have identified them during your migration preparation.
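For OpenTelemetry-based instrumentation that exports over OTLP, the API Key is typically sent in the `x-honeycomb-team` header, and a General dataset (such as metrics) is named with the `x-honeycomb-dataset` header. The sketch below assumes OTLP export and uses a hypothetical helper, `otlp_headers`, to build a value for the standard `OTEL_EXPORTER_OTLP_HEADERS` environment variable. It shows the difference described above: trace data needs only the Environment API Key, while General data also carries a dataset name. The key and dataset name are placeholders.

```python
from typing import Optional


def otlp_headers(api_key: str, dataset: Optional[str] = None) -> str:
    """Build an OTEL_EXPORTER_OTLP_HEADERS value for Honeycomb (sketch).

    Trace data in an Environment is identified by the API Key alone, so no
    dataset header is added unless a General dataset name is given.
    """
    if not api_key:
        raise ValueError("an Environment API Key is required")
    headers = {"x-honeycomb-team": api_key}
    if dataset is not None:
        # General datasets (for example, metrics) still need a name.
        headers["x-honeycomb-dataset"] = dataset
    # OTEL_EXPORTER_OTLP_HEADERS uses comma-separated key=value pairs.
    return ",".join(f"{key}={value}" for key, value in headers.items())


if __name__ == "__main__":
    env_key = "YOUR_ENVIRONMENT_API_KEY"  # placeholder, not a real key
    print(otlp_headers(env_key))                          # traces
    print(otlp_headers(env_key, dataset="host-metrics"))  # a General dataset
```

The same header string works for every traced service that shares the Environment; only General data sources need their own dataset name.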

After changing your API Keys, Honeycomb should show a new Service dataset for each unique service.name value in your trace data. Refer to the Dataset and Services lists from your migration preparation to confirm that all expected Datasets were created.
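One way to do this comparison is a simple set difference. The sketch below assumes you have copied, by hand, the service.name values and General dataset names from your migration preparation, plus the dataset names you now see in the new Environment. All names in it are hypothetical. It reports expected datasets that have not appeared yet, and unexpected ones that may point to a misspelled or misconfigured service.name.

```python
def compare_datasets(services, general_datasets, observed):
    """Compare expected datasets with those observed in the new Environment.

    Each unique service.name is expected to produce its own Service dataset;
    General datasets keep the names they are sent with.
    """
    expected = set(services) | set(general_datasets)
    seen = set(observed)
    return {
        "missing": sorted(expected - seen),
        "unexpected": sorted(seen - expected),
    }


if __name__ == "__main__":
    # Hypothetical values copied from the migration preparation lists.
    services = ["checkout", "frontend", "payments"]
    general = ["host-metrics"]
    # Hypothetical dataset names seen in the new Environment.
    seen = ["chekout", "frontend", "host-metrics"]
    print(compare_datasets(services, general, seen))
```

Here "checkout" and "payments" would be reported as missing and "chekout" as unexpected, which suggests a typo in one service's service.name.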

Use the Service Map to confirm that Services appear as expected in their new Environment.

Recreate the configuration for each Honeycomb feature using one of the following options:

If manually recreating, we recommend recreating each Honeycomb feature in the following order:

To ensure a complete migration, use the “Honeycomb Features and Configurations” list from your migration preparation as a reference.

After migration, review your Environment and configurations for any errors.

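In new service-specific Honeycomb configurations, remove any service name references (service.name) that are no longer needed. For example, a filter on service.name is redundant in a query that already targets that Service's own dataset.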
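If you keep query definitions as JSON, this cleanup can be scripted. The sketch below assumes a query represented as a dictionary shaped like Honeycomb's query specification (a `filters` list of `column`/`op`/`value` objects). The helper name and example values are hypothetical. It drops only equality filters on service.name that match the target Service and leaves every other filter intact.

```python
import copy


def drop_redundant_service_filters(query: dict, service: str) -> dict:
    """Return a copy of `query` without service.name filters that are
    redundant once the query runs against `service`'s own dataset."""
    cleaned = copy.deepcopy(query)  # never mutate the caller's query
    if "filters" in cleaned:
        cleaned["filters"] = [
            f for f in cleaned["filters"]
            if not (
                f.get("column") == "service.name"
                and f.get("op") == "="
                and f.get("value") == service
            )
        ]
    return cleaned


if __name__ == "__main__":
    query = {
        "calculations": [{"op": "COUNT"}],
        "filters": [
            {"column": "service.name", "op": "=", "value": "checkout"},
            {"column": "http.status_code", "op": ">=", "value": 500},
        ],
    }
    print(drop_redundant_service_filters(query, "checkout"))
```

Filters on other services, or with other operators, are kept because they may still change the query's results.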

If no longer needed, delete your Classic Environment using Delete Environments in Environment Settings. You must be a team owner to delete an environment.

You may want to keep your Classic Environment if:

Classic data ages out according to your retention period. We encourage you to delete your Classic Environment once you no longer need it.

Join the #discuss-hny-classic channel in our Pollinators Community Slack to ask questions and learn more.

Pro and Enterprise users can contact Support at support.honeycomb.io or by email at support@honeycomb.io.

To migrate Refinery from Classic to Environments:

1. Update Refinery to a version that supports Environments.
2. Update your Sampling Rules in rules.toml to support Environments, as needed.
3. Update config.toml to use the new Environment API Key(s) and Environment name(s).
4. Optionally, add EnvironmentCacheTTL, an optional Refinery configuration option, to config.toml.

Update your Refinery deployment to a version that supports Environments and Services.

We recommend updating to the latest available version, but the minimum required version for Refinery is version 1.12.0.

As needed, update your Refinery Sampling Rules in rules.toml to include Environment Sampling Rules.

Consider updating them if your existing Sampling Rules need to specify sampling based on service name. If a Dataset name matches the new Environment name, you may not need to update anything.

Good news! Refinery Sampling Rules for an Environment and a Classic Dataset can coexist, even when they share the same name. Without this, migrating Refinery would require running two Refinery clusters, which is not ideal. Use coexisting Sampling Rules to keep sampling yet-to-be-migrated data sent to Honeycomb Classic while also sampling migrated services sent to Environments.

To let Classic Datasets and Environments coexist, set DatasetPrefix in config.toml.

When Refinery receives telemetry sent with a Classic Dataset API key, it prepends the DatasetPrefix to the dataset name and resolves rules using the format {prefix}.{dataset}.
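The naming scheme above can be sketched as follows. This is a simplified model of the lookup, not Refinery's actual code: the function names are hypothetical, and falling back to the default rules when no specific entry exists is an assumption of the sketch.

```python
from typing import Optional


def rule_key(name: str, is_classic_key: bool, prefix: Optional[str]) -> str:
    """Return the rules.toml key used for the lookup (sketch).

    Classic Dataset keys resolve as "{prefix}.{dataset}" when a prefix is set;
    Environment keys resolve by Environment name, unchanged.
    """
    if is_classic_key and prefix:
        return f"{prefix}.{name}"
    return name


def pick_rules(rules: dict, key: str, default: dict) -> dict:
    # Assumption: use the default rules when no specific entry exists.
    return rules.get(key, default)


if __name__ == "__main__":
    rules = {
        "production": {"SampleRate": 20},          # Environment
        "classic.production": {"SampleRate": 30},  # Classic dataset
    }
    default = {"SampleRate": 1}
    for classic in (False, True):
        key = rule_key("production", classic, prefix="classic")
        print(key, pick_rules(rules, key, default))
```

With a prefix of "classic", the same name "production" resolves to two distinct rule entries, which is what allows both to coexist in one Refinery cluster.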

Set the dataset prefix (DatasetPrefix) in config.toml.

For example:

```toml
DatasetPrefix = "classic"
```

In rules.toml, prefix the names of your Classic dataset rules with the DatasetPrefix value.

For example, these sampling rules apply to the Environment “Hello world”, the Environment “production”, and the “production” dataset in Honeycomb Classic.

Note that the “production” Honeycomb Classic Dataset is configured as [classic.production].

```toml
# default rules
Sampler = "DeterministicSampler"
SampleRate = 1

['Hello world'] # environment, wrap with '' if name contains white space
    Sampler = "DeterministicSampler"
    SampleRate = 10

[production] # environment
    Sampler = "DeterministicSampler"
    SampleRate = 20

[classic.production] # classic dataset
    Sampler = "DeterministicSampler"
    SampleRate = 30
```

When it receives telemetry data, Refinery determines whether the API key is a Classic Dataset key (32 characters) or an Environment key (22 or 23 characters).

For Classic Dataset keys, Refinery keeps its pre-existing behavior and uses the dataset named in the event to look up the sampler definition, applying the DatasetPrefix if one is set.

For Environment keys, Refinery calls the Honeycomb API with the key to retrieve the Environment name and caches the result.

Refinery then uses that name to look up the sampler definition.

(Change how long Refinery caches the Environment name value with EnvironmentCacheTTL.)
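The key-type check and lookup described above can be sketched as follows. The sketch uses only the key lengths stated here (32 characters for Classic Dataset keys, 22 or 23 for Environment keys); Refinery's actual implementation may check more than length. The `fetch_environment_name` callback stands in for the Honeycomb API call and, like the example keys, is hypothetical.

```python
from typing import Callable, Optional


def key_type(api_key: str) -> str:
    """Classify an API key by length, using the lengths described above."""
    if len(api_key) == 32:
        return "classic"
    if len(api_key) in (22, 23):
        return "environment"
    raise ValueError(f"unrecognized API key length: {len(api_key)}")


def sampler_key(
    api_key: str,
    event_dataset: str,
    fetch_environment_name: Callable[[str], str],
    dataset_prefix: Optional[str] = None,
) -> str:
    """Return the name used to look up the sampler definition (sketch)."""
    if key_type(api_key) == "classic":
        # Pre-existing behavior: use the dataset named in the event,
        # prefixed when DatasetPrefix is set.
        if dataset_prefix:
            return f"{dataset_prefix}.{event_dataset}"
        return event_dataset
    # Environment key: ask Honeycomb for the Environment name.
    # (Refinery caches this value; see EnvironmentCacheTTL.)
    return fetch_environment_name(api_key)


def fake_lookup(api_key: str) -> str:
    """Hypothetical stand-in for the Honeycomb API call."""
    return "production"


if __name__ == "__main__":
    print(sampler_key("a" * 32, "production", fake_lookup, "classic"))
    print(sampler_key("b" * 22, "ignored", fake_lookup, "classic"))
```

For an Environment key, the event's dataset field plays no part in choosing the sampler; only the Environment name returned by the lookup does.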

Evaluate and update your Refinery's general configuration in config.toml:

- APIKeys: add your new Environment API Key(s) if Refinery does not accept all API Keys
- DatasetPrefix: set it if an Environment and a Classic Dataset use the same name
- EnvironmentCacheTTL: set it if you need to change the default

EnvironmentCacheTTL is a new, optional Refinery configuration option for Environments users.

When given an Environment API key, Refinery looks up the associated Environment with an HTTP call to Honeycomb.

The EnvironmentCacheTTL configuration controls how long the retrieved Environment name is cached.

The default is 1 hour (“1h”). This setting is not eligible for live reload, so a change takes effect only after Refinery restarts.

To cache for a different length of time than the default 1 hour, set the EnvironmentCacheTTL in config.toml:

```toml
EnvironmentCacheTTL = "2h"
```
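The caching behavior described above can be modeled with a small time-to-live (TTL) cache. This is an illustrative sketch, not Refinery's implementation: `EnvironmentNameCache` and the `fetch` callback (standing in for the HTTP call to Honeycomb) are hypothetical, and the clock is injectable so that expiry can be demonstrated without waiting. With a TTL of 2 hours, repeated lookups for the same key reach Honeycomb at most once every 2 hours.

```python
import time
from typing import Callable, Dict, Tuple


class EnvironmentNameCache:
    """Cache Environment names per API key for a fixed TTL (sketch)."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        # api_key -> (environment name, expiry time)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def get(self, api_key: str) -> str:
        now = self._clock()
        entry = self._entries.get(api_key)
        if entry is not None and now < entry[1]:
            return entry[0]  # still fresh: no call to Honeycomb
        name = self._fetch(api_key)  # missing or expired: look it up again
        self._entries[api_key] = (name, now + self._ttl)
        return name


if __name__ == "__main__":
    calls = []

    def fetch(key: str) -> str:
        calls.append(key)
        return "production"

    fake_now = [0.0]
    cache = EnvironmentNameCache(fetch, ttl_seconds=2 * 3600,
                                 clock=lambda: fake_now[0])
    cache.get("k")                # first lookup: calls fetch
    cache.get("k")                # cache hit
    fake_now[0] = 2 * 3600 + 1    # just past the "2h" TTL
    cache.get("k")                # expired: calls fetch again
    print(len(calls))
```

A longer TTL means fewer calls to Honeycomb; a shorter one means a renamed Environment is picked up sooner.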

At this point, your Refinery instance should have:

Once your Refinery update is complete, send your Honeycomb data to an Environment.

You may find that the Refinery Rules configuration needs adjustment after data is sent to an Environment. Modify the rules as necessary.