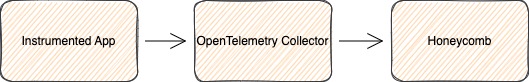

The OpenTelemetry Collector offers a vendor-agnostic way to gather observability data from a variety of instrumentation solutions and send that data to Honeycomb. Applications instrumented with OpenTelemetry SDKs or with Jaeger or Zipkin can use the OpenTelemetry Collector to send trace data to Honeycomb as events. Additionally, applications instrumented with OpenTelemetry SDKs or with metrics data from Prometheus, StatsD, Influx, and others can use the OpenTelemetry Collector to send metrics data to Honeycomb.

A Collector can be used with any number of applications, and by default all OpenTelemetry SDKs export telemetry to the Collector’s OTLP endpoint. Using a Collector lets you experiment with and set up new instrumentation, and export to multiple backends, which may include Honeycomb or even another Collector. A Collector can also offload work from your applications by processing telemetry before it is sent on, using processors for batching, transforming, and filtering data.

Honeycomb supports receiving telemetry data via OpenTelemetry’s native protocol, OTLP, over gRPC, HTTP/protobuf, and HTTP/JSON. The minimum supported versions of OTLP protobuf definitions are 1.0 for traces, metrics, and logs.

This means you can use the OpenTelemetry Collector and its standard OTLP exporter to send data to Honeycomb without any additional exporters or plugins.

To send trace data to Honeycomb, configure an OTLP exporter with the Honeycomb API Key as a header, and include the exporter in the relevant pipeline:

```yaml
exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
```

The Collector consists of three components: receivers, processors, and exporters, which are then used to construct telemetry pipelines.

To configure the components for the Collector, create a file called otel-collector-config.yaml.

Add receivers for OTLP trace output from applications instrumented with OpenTelemetry:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
```

Include a batch processor to batch telemetry before sending to Honeycomb:

```yaml
processors:
  batch:
```

Configure an OTLP exporter with the Honeycomb API Key as a header:

```yaml
exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
```

Finally, configure a service pipeline for traces:

```yaml
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

The final result of adding receivers, processors, exporters, and pipelines will look like this:

```yaml
# otel-collector-config.yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  batch:

exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

If you are a Honeycomb Classic user, you must also specify the Dataset using the x-honeycomb-dataset header in the exporters section, on the line below the x-honeycomb-team header.

A Dataset is a bucket where data gets stored in Honeycomb.

```yaml
exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
      "x-honeycomb-dataset": "YOUR_DATASET"
```

The Collector can handle OTLP metrics and logs signals in addition to traces.

Metrics require a dataset in the exporter.

Logs use the service.name associated with the trace from which they originated, or a dataset if one is provided.

You can send these signals to the same destination or to multiple destinations in Honeycomb.

To use a second exporter of the same type in a different pipeline, give it a distinct name by appending a suffix such as /my-pipeline to the exporter type (for example, otlp/metrics).

The following example exports OTLP application metrics to a specified dataset, and exports logs to the same place as traces.

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  batch:

exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
  otlp/metrics:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
      "x-honeycomb-dataset": "YOUR_METRICS_DATASET"

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp/metrics]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```
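Because logs use a dataset when one is provided, the same naming pattern can route logs to a dataset of their own. The fragment below is a minimal sketch, not a complete configuration: the exporter name otlp/logs and the placeholder YOUR_LOGS_DATASET are assumptions introduced for illustration, and the receivers and processors sections are assumed to match the example above.

```yaml
exporters:
  # Hypothetical exporter name; any suffix after "otlp/" works
  otlp/logs:
    endpoint: "api.honeycomb.io:443" # US instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
      # Placeholder dataset name; replace with your own
      "x-honeycomb-dataset": "YOUR_LOGS_DATASET"

service:
  pipelines:
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp/logs]
```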

To receive browser telemetry, the Collector requires an enabled OTLP/HTTP receiver.

The allowed_origins property is required to enable Cross-Origin Resource Sharing (CORS) from the browser to the collector.

The Collector will need to be accessible by the browser.

It is recommended to put an external load balancer in front of the collector, which will also need to be configured to accept requests from the browser origin.

You can also configure the Collector to symbolicate your JavaScript stack traces. This translates obfuscated or minified names from your stack traces into human-readable formats.

The example below configures the OpenTelemetry Collector to accept OpenTelemetry traces from the browser. The CORS settings are required for browser data to reach Honeycomb through the Collector.

```yaml
receivers:
  otlp:
    protocols:
      http:
        endpoint: 0.0.0.0:4318
        cors:
          allowed_origins:
            - "http://*.<yourdomain>.com"
            - "https://*.<yourdomain>.com"

processors:
  batch:

exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

More configuration options can be found in the Collector GitHub repository.

If you are a Honeycomb Classic user, you must also specify the Dataset using the x-honeycomb-dataset header in the exporters section, on the line below the x-honeycomb-team header.

A Dataset is a bucket where data gets stored in Honeycomb.

```yaml
exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
      "x-honeycomb-dataset": "YOUR_DATASET"
```

Find out more about setting up browser instrumentation.

Honeycomb provides several Collector processors for symbolicating stack traces from your browser, iOS, Android, and React Native applications. This gives you human-readable errors and stack traces in your frontend launchpad’s Errors UI.

Honeycomb’s documentation includes tutorials on setting up these processors.

The Collector can receive telemetry from different applications in different formats, and also export to multiple backends.

The following is a complete configuration file example for a Collector instance that accepts Jaeger and OpenTelemetry (over gRPC and HTTP) trace data, as well as Prometheus metrics. This example includes the batch processor and several extensions, and exports both trace and metric data to Honeycomb:

```yaml
receivers:
  jaeger:
    protocols:
      thrift_http:
        endpoint: "0.0.0.0:14268"
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  prometheus:
    config:
      scrape_configs:
        - job_name: "prometheus"
          scrape_interval: 15s
          static_configs:
            - targets: ["0.0.0.0:9100"]

processors:
  batch:

exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
  otlp/metrics:
    endpoint: "api.honeycomb.io:443" # US instance
    #endpoint: "api.eu1.honeycomb.io:443" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
      "x-honeycomb-dataset": "YOUR_METRICS_DATASET"

extensions:
  health_check:
    endpoint: 0.0.0.0:13133
  pprof:
  zpages:

service:
  extensions: [health_check, pprof, zpages]
  pipelines:
    traces:
      receivers: [jaeger, otlp]
      processors: [batch]
      exporters: [otlp]
    metrics:
      receivers: [prometheus]
      processors: []
      exporters: [otlp/metrics]
```

See the Collector documentation for more configuration options.

The otlp exporter uses the gRPC protocol to send telemetry data.

To use HTTP/protobuf instead of gRPC, use the otlphttp exporter, update the endpoint, and update the pipeline:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

exporters:
  otlphttp:
    endpoint: "https://api.honeycomb.io" # US instance
    #endpoint: "https://api.eu1.honeycomb.io" # EU instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: []
      exporters: [otlphttp]
```

You can run the Collector in Docker to try it out locally. This is useful when you are adding instrumentation in development and want to send events to Honeycomb.

For instance, if your configuration file is called otel_collector_config.yaml and is in the current working directory, the following command runs the Collector with ports open for receiving OTLP data (4317 and 4318) and Jaeger Thrift HTTP data (14268):

```shell
docker run \
  -p 14268:14268 \
  -p 4317-4318:4317-4318 \
  -v $(pwd)/otel_collector_config.yaml:/etc/otelcol-contrib/config.yaml \
  ghcr.io/open-telemetry/opentelemetry-collector-releases/opentelemetry-collector-contrib:latest
```

When using the core Collector image, which includes a more limited set of components, the configuration path inside the container and the image name are slightly different:

```shell
docker run \
  -p 14268:14268 \
  -p 4317-4318:4317-4318 \
  -v $(pwd)/otel_collector_config.yaml:/etc/otelcol/config.yaml \
  ghcr.io/open-telemetry/opentelemetry-collector-releases/opentelemetry-collector:latest
```

More details on running the Collector can be found in its documentation.
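If you manage local services with Docker Compose, the contrib image can be expressed as a Compose service instead of a docker run command. This is a sketch under assumptions: Docker Compose is not covered by this guide, the service name otel-collector is hypothetical, and the file is assumed to sit next to otel_collector_config.yaml.

```yaml
# docker-compose.yaml (hypothetical file, placed next to otel_collector_config.yaml)
services:
  otel-collector:
    image: ghcr.io/open-telemetry/opentelemetry-collector-releases/opentelemetry-collector-contrib:latest
    volumes:
      # Mount the local configuration at the path the contrib image reads
      - ./otel_collector_config.yaml:/etc/otelcol-contrib/config.yaml
    ports:
      - "14268:14268" # Jaeger Thrift HTTP
      - "4317:4317"   # OTLP over gRPC
      - "4318:4318"   # OTLP over HTTP
```

With this file in place, `docker compose up` should start the Collector with the same ports published as in the contrib docker run example.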

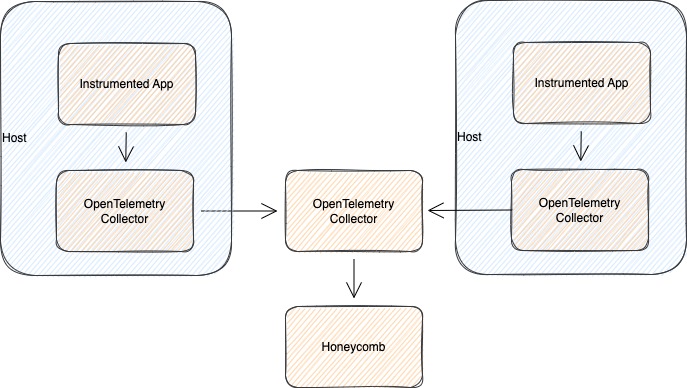

There are various deployment patterns that can be considered for different use cases. We cover the two most common deployment patterns here.

A Collector running in Gateway mode is a Collector instance running as a standalone service (for example, container or deployment), typically per cluster, data center, or region.

You can then export telemetry data from this collector instance to one or more backends like Honeycomb.

Deploy the Collector in Gateway Mode when:

- You want to use the clustermetrics receiver to collect cluster-level metrics

You can scale the Collector in gateway mode horizontally or vertically by running several instances of the Collector behind a load balancer.

A Collector instance running in agent mode runs alongside the application or on the same host as the application (for example, as a binary, sidecar, or DaemonSet).

Telemetry data from the collector instance (or multiple instances) can then be sent to a central collector to export to Honeycomb or directly to Honeycomb.

Deploy the Collector in Agent Mode when:

- You want to use the k8sattributes processor, which adds metadata attributes to spans, metrics, and logs. These attributes can make it easier to correlate data in Honeycomb
- You want to use the hostmetrics scraper to collect host metrics

Review the Collector Deployment documentation for more details on deployment options.
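For the pattern in which agent Collectors forward to a central Collector, the agent's exporter points at the gateway rather than at Honeycomb. The following is a minimal sketch of an agent configuration. The gateway address otel-gateway.example.internal:4317 is hypothetical, and the insecure TLS setting assumes plaintext traffic inside a trusted network, which may not suit your environment.

```yaml
# Agent Collector: receives OTLP locally and forwards it to a gateway Collector
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317

processors:
  batch:

exporters:
  otlp:
    # Hypothetical gateway address; replace with your gateway's host and port
    endpoint: "otel-gateway.example.internal:4317"
    tls:
      # Assumes unencrypted traffic within a trusted network
      insecure: true

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

The gateway Collector would then use a configuration like the Honeycomb examples earlier in this guide, with the Honeycomb API key kept only on the gateway.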

If using version v0.66.0 (or higher) of the OpenTelemetry Collector-Contrib distribution, use the filterprocessor to filter Span Events or any other data before sending it to Honeycomb.

The filterprocessor component can be especially helpful if using instrumentations that create a lot of noisy, unneeded data.

To use, add the filterprocessor component as a processor in your OpenTelemetry Collector configuration file, such as otel-collector-config.yaml.

Configure your OpenTelemetry Collector to filter Span Events, similar to:

```yaml
processors:
  # add the filterprocessor
  filter:
    # tell it to operate on span data
    traces:
      # Filter out span events with the 'grpc' attribute,
      # or have a span event name with 'grpc' in it.
      spanevent:
        - 'attributes["grpc"] == true'
        - 'IsMatch(name, ".*grpc.*") == true'
```

Note that each individual line in the spanevent list is a separate filter.

If any of the filters match, the span event will be filtered out.

In other words, each line is an OR condition.

Additionally, Honeycomb currently translates the instrumentation_scope.name field into library.name when it stores the data.

Because the filter runs in the Collector, before the data reaches Honeycomb, use instrumentation_scope.name instead of library.name to filter based on the value of an instrumentation scope.
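As an illustration of filtering on the instrumentation scope, the sketch below drops every span produced by one instrumentation library. The scope name my.noisy.library is a hypothetical placeholder, not a value from this guide.

```yaml
processors:
  filter:
    traces:
      span:
        # Hypothetical scope name; drops all spans emitted by this library
        - 'instrumentation_scope.name == "my.noisy.library"'
```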

To require multiple conditions to be true, write a single filter that combines them, similar to:

```yaml
processors:
  filter:
    traces:
      # Filter out only span events with both the 'grpc' attribute and
      # that have a span event name with 'grpc' in it.
      spanevent:
        - 'attributes["grpc"] == true and IsMatch(name, ".*grpc.*") == true'
```

You can filter any OpenTelemetry signals, not only Span Events. This example filters data across spans, metrics, and logs:

```yaml
processors:
  filter:
    traces:
      span:
        - 'attributes["container.name"] == "app_container_1"'
        - 'resource.attributes["host.name"] == "localhost"'
        - 'name == "app_3"'
      spanevent:
        - 'attributes["grpc"] == true'
        - 'IsMatch(name, ".*grpc.*") == true'
    metrics:
      metric:
        - 'name == "my.metric" and attributes["my_label"] == "abc123"'
        - 'type == METRIC_DATA_TYPE_HISTOGRAM'
      datapoint:
        - 'metric.type == METRIC_DATA_TYPE_SUMMARY'
        - 'resource.attributes["service.name"] == "my_service_name"'
    logs:
      log_record:
        - 'IsMatch(body, ".*password.*") == true'
        - 'severity_number < SEVERITY_NUMBER_WARN'
```

Filter conditions are written in the OpenTelemetry Transformation Language (OTTL). Learn more about the OTTL Processor.
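Defining the filter processor is not enough on its own: like any processor, it only takes effect once it is listed in a pipeline. The fragment below is a sketch that assumes the otlp receiver, batch processor, and otlp exporter from the earlier examples are already defined.

```yaml
service:
  pipelines:
    traces:
      receivers: [otlp]
      # Filter first so that dropped data is never batched or exported
      processors: [filter, batch]
      exporters: [otlp]
```

Processors run in the order listed, so placing filter before batch keeps unwanted data out of the batches sent to Honeycomb.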

Sometimes you want to make sure that certain information does not escape your application or service. This could be for regulatory reasons regarding personally identifiable information, or because you want to ensure that certain information does not end up being stored with a vendor. Scrubbing attributes from a span is possible with the built-in Attributes Span Processor. Span processors in OpenTelemetry allow you to hook into the lifecycle of a span and modify the span contents before sending to a backend.

The Attributes Span Processor is configured with actions. The delete action removes span attributes.

Other actions are also available, such as upsert, which replaces the value, and hash, which replaces the value with its SHA1 hash.

```yaml
processors:
  attributes:
    actions:
      - key: my.sensitive.data
        action: delete
```
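The other actions follow the same shape. The sketch below hashes one attribute and overwrites another. Both attribute keys and the replacement value are hypothetical, chosen only for illustration.

```yaml
processors:
  attributes:
    actions:
      # Hypothetical key: replace the value with its hash
      - key: user.email
        action: hash
      # Hypothetical key: set the value, inserting the attribute if it is missing
      - key: deployment.note
        action: upsert
        value: "redacted"
```

As with any processor, attributes must also be listed in the processors of each pipeline where it should apply.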

If request sizes are too large, there will be an error when trying to send to Honeycomb. The request size limit is 15MB. To help mitigate errors for requests being too large, it is recommended to set a limit on the batch size and use compression when exporting to Honeycomb.

The batch processor has two relevant configuration options: send_batch_size and send_batch_max_size.

These options count the items in a batch (spans, metric data points, or log records), not the size of the batch in bytes.

A one-size-fits-all value does not exist for each of these, since different requests will vary in size.

However, these are worth tuning to find the right limit to ensure data is being sent reliably.

Collector exporters have configuration options to enable compression, including for both gRPC and HTTP.

Currently supported compression types include gzip, snappy, and zstd.
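Putting the batch limits and compression together might look like the following sketch. The batch sizes are illustrative values only, not recommendations from this guide; tune them against your own traffic.

```yaml
processors:
  batch:
    # Illustrative values only; these count items, not bytes
    send_batch_size: 4096
    # Upper bound on items per batch; must not be smaller than send_batch_size
    send_batch_max_size: 8192

exporters:
  otlp:
    endpoint: "api.honeycomb.io:443" # US instance
    headers:
      "x-honeycomb-team": "YOUR_API_KEY"
    # Compress requests before sending them to Honeycomb
    compression: gzip
```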

Because Honeycomb supports OTLP, the old Honeycomb-specific exporter is no longer required and is no longer available in current versions of the Collector. The built-in OTLP exporter should be used instead to send trace data to Honeycomb.

Most OpenTelemetry SDKs have an option to export telemetry as OTLP either over gRPC or HTTP/protobuf, with some also offering HTTP/JSON. If you are trying to choose between gRPC and HTTP, keep in mind:

gRPC default export uses port 4317, whereas HTTP default export uses port 4318.

To explore common issues when sending data, visit Common Issues with Sending Data in Honeycomb.