The Burn Alerts feature provides notifications related to your SLO Budget. Burn Alerts can notify you when issues impact your SLO budget, which represents the maximum allocation of failures for your service. Configured alerts let you react to the incidents that matter most to you as defined in your SLO.

Use cases for Burn Alerts include, but are not limited to:

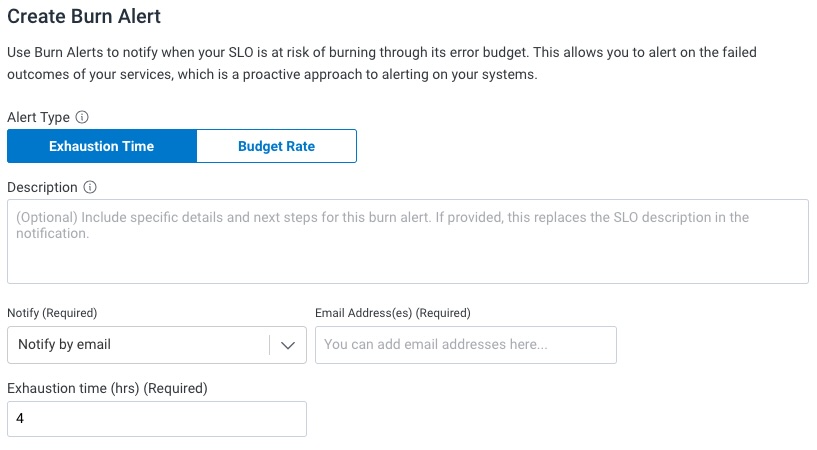

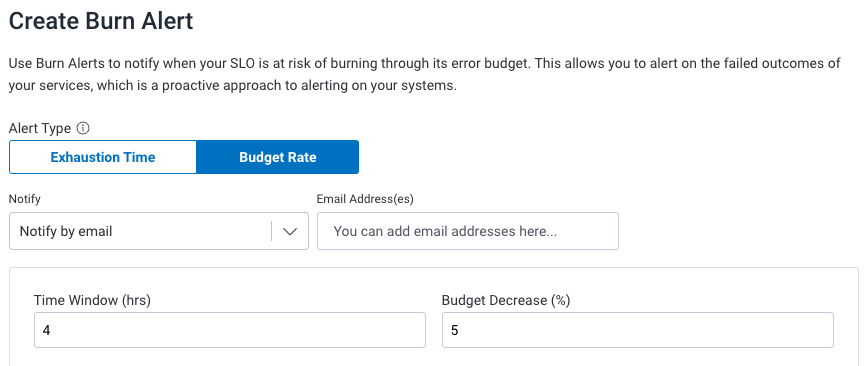

When creating a Burn Alert, choose from the following Burn Alert types:

| | Exhaustion Time | Budget Rate |
|---|---|---|
| Description | Notifies when your SLO is at risk of burning through its error budget within a specified number of hours. This allows for proactive steps before the SLO budget reaches zero. | Notifies when the SLO budget drops by a minimum specified percentage within a defined time window. This allows for the detection of budget burn issues and unexpected spikes in a timely manner. |
| Parameters | Exhaustion Time (hours) | Time Window (hours), Budget Decrease (%) |
| Example Alert | Alert me when I am about to run out of budget in 24 hours. | Alert me when the SLO budget decreases by 10% in the last 2 hours. |
| Signal of the Alert | Alert when you are X hours away from violating your SLO. | Alert when the SLO budget starts to rapidly burn or inconsistently burn. |

To add a Burn Alert:

where:

Description adds context, such as runbook links or alert summaries, to the Burn Alert. When provided, the Burn Alert description appears in the notification instead of the SLO description.

Notify configures the notification option for the Burn Alert.

Exhaustion time (hours) sets when you are notified: Honeycomb alerts when your SLO budget is projected to hit zero within this many hours.

A value of 0.25 corresponds to 15 minutes, which is usually not enough time to act before the SLO budget is exhausted. Conversely, periods of more than a few days almost never merit notification, because such an alert effectively acts the same as the SLO’s time period.

Learn about Best Practices for Exhaustion Time burn alerts.

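Time Window (hours) is the period over which the decrease in SLO budget is measured. Minimum value is 1. Maximum value is the length of your SLO.

Budget Decrease (%) is the minimum percentage drop in SLO budget within the Time Window that triggers a notification. Minimum value is 0.0001. Maximum value is 100.

A Budget Burndown graph also appears, which projects potential alert frequencies based on input values.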

Learn about Best Practices for Budget Rate burn alerts.
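The input ranges for a Budget Rate burn alert can be checked before creating it. The following is a minimal Python sketch, assuming the 1-hour minimum and SLO-length maximum apply to Time Window and the 0.0001 to 100 range applies to Budget Decrease. `validate_budget_rate_alert` is a hypothetical local helper, not part of any Honeycomb API.

```python
def validate_budget_rate_alert(
    time_window_hours: float,
    budget_decrease_pct: float,
    slo_length_hours: float,
) -> list[str]:
    """Return a list of problems with the inputs; an empty list means they are in range.

    Hypothetical helper: it mirrors the documented limits, not Honeycomb's own validation.
    """
    problems = []
    # Time Window: minimum 1 hour, maximum the length of the SLO.
    if not 1 <= time_window_hours <= slo_length_hours:
        problems.append(f"Time Window must be between 1 and {slo_length_hours} hours")
    # Budget Decrease: minimum 0.0001%, maximum 100%.
    if not 0.0001 <= budget_decrease_pct <= 100:
        problems.append("Budget Decrease must be between 0.0001 and 100 percent")
    return problems


# A 7-day SLO is 168 hours long.
print(validate_budget_rate_alert(4, 5, slo_length_hours=168))      # []
print(validate_budget_rate_alert(0.5, 150, slo_length_hours=168))  # two problems
```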

Notify by email appears as the default notification method, which requires entering one or more email addresses. Enter multiple emails separated by commas.

Additional integration options, like Slack and PagerDuty, are populated from SLO and Trigger Recipients, as found under Team Settings > Integrations. Once configured, these additional options can be selected.

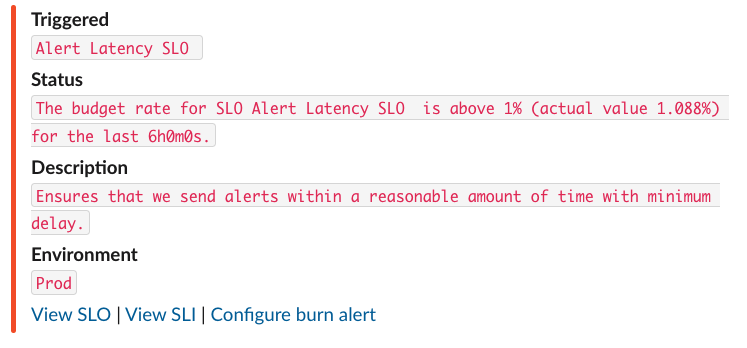

For example, a Budget Rate burn alert in Slack appears similar to:

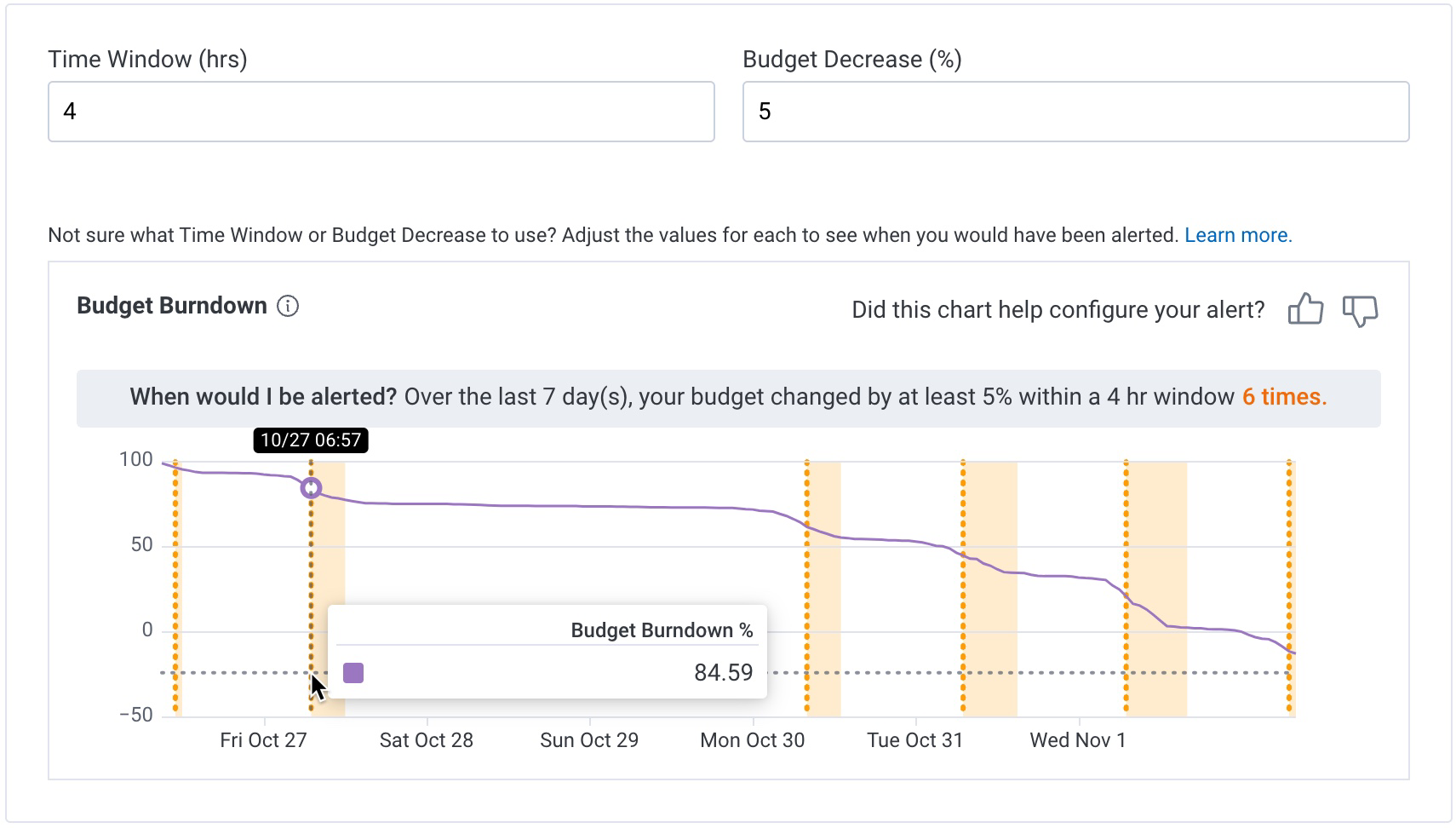

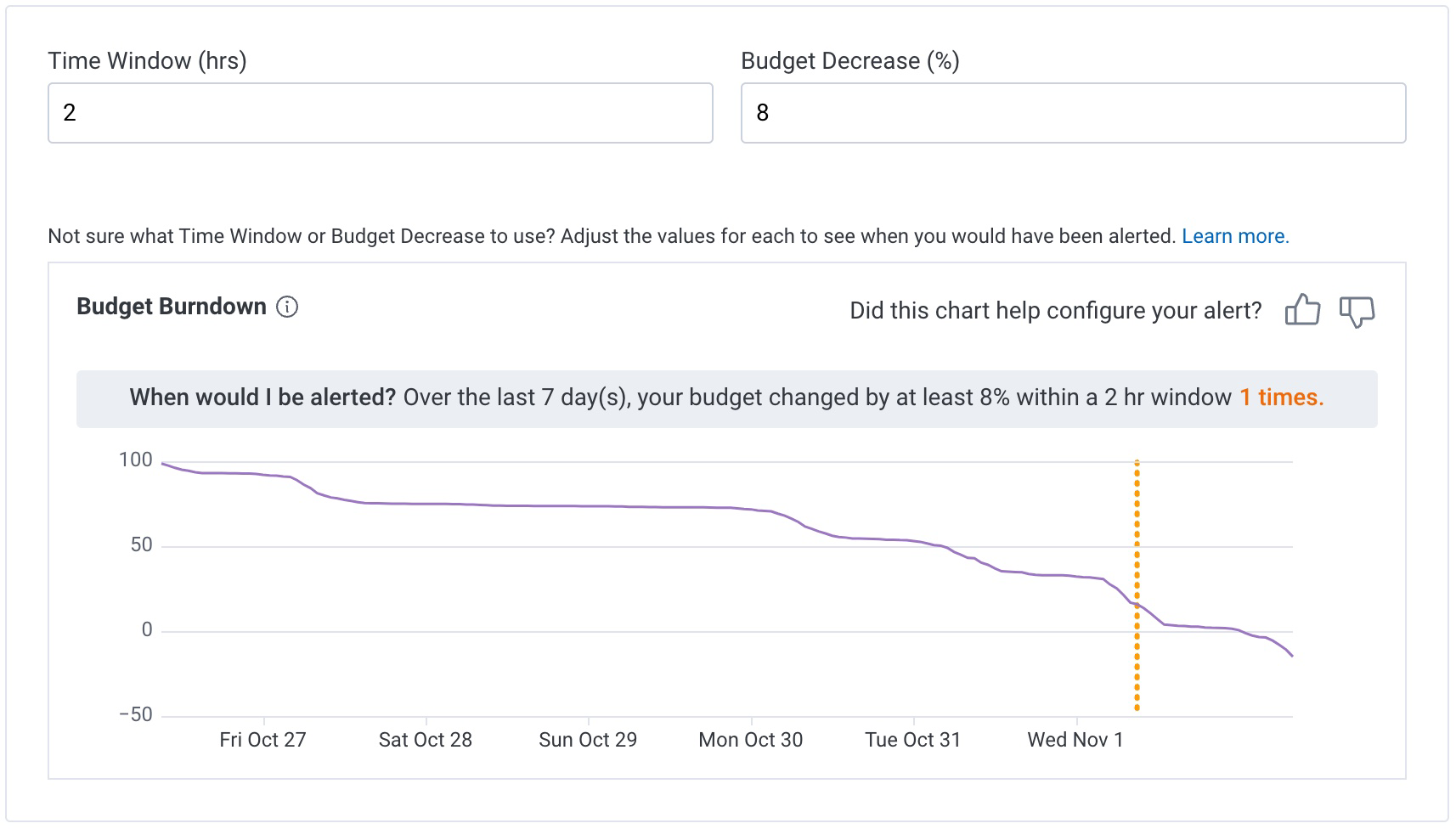

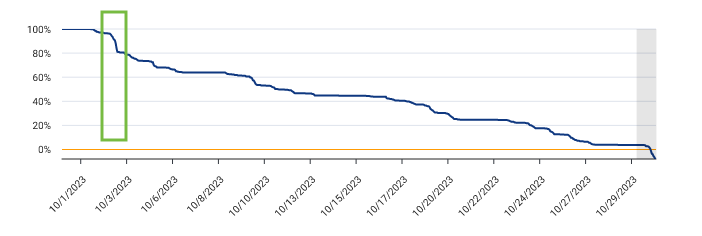

When creating a Budget Rate burn alert, the Budget Burndown graph appears. Use the Budget Burndown graph to determine the Time Window and Budget Decrease values that work best.

The graph shows the Budget Burndown over the SLO’s time period. Change the values for Time Window and/or Budget Decrease to see different graph projections. Dashed line markers on the graph represent when alerts would have been sent, and the light orange range represents how long each alert would remain active.

In the example below, the SLO’s time period is 7 days.

A 4-hour Time Window and a 5% Budget Decrease would cause alerts to occur 6 times.

Hovering over the marker for the second alert reveals an estimated notification time of 6:57 AM on October 27.

You may decide that a 5% decrease alerts too often and that amount of burn over the 4-hour window is not serious enough to alert the team.

Further experimentation may find that a 2-hour Time Window and an 8% Budget Decrease is perfect for your team.

Entering these values shows a graph with the next estimated alert notification(s), based on these values.
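The idea behind the Budget Burndown graph, replaying a budget history to see when alerts would have fired and how long each would stay active, can be sketched in Python. This is an approximation under stated assumptions, not Honeycomb's implementation: it assumes evenly spaced hourly budget samples, measures the decrease between the first and last sample of each window, and ignores the resolution buffer. `alert_episodes` and the sample data are hypothetical.

```python
def alert_episodes(
    budget_pct: list[float], window_samples: int, threshold_pct: float
) -> list[tuple[int, int]]:
    """Return (first_active, last_active) sample indexes for each alert episode."""
    episodes = []
    start = None
    for i in range(window_samples, len(budget_pct)):
        # Budget consumed over the Time Window ending at sample i.
        active = budget_pct[i - window_samples] - budget_pct[i] >= threshold_pct
        if active and start is None:
            start = i  # a new alert would be sent here (a dashed marker)
        elif not active and start is not None:
            episodes.append((start, i - 1))  # the active range ends
            start = None
    if start is not None:
        episodes.append((start, len(budget_pct) - 1))  # still active at the end
    return episodes


# Hypothetical hourly samples of remaining budget (%).
history = [100, 99, 98, 90, 89, 88, 87, 80, 79]
print(alert_episodes(history, window_samples=2, threshold_pct=5))   # [(3, 4), (7, 8)]
print(alert_episodes(history, window_samples=2, threshold_pct=10))  # []
```

Raising the Budget Decrease threshold removes alerts that a lower threshold would send, which is the same tuning loop the graph supports.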

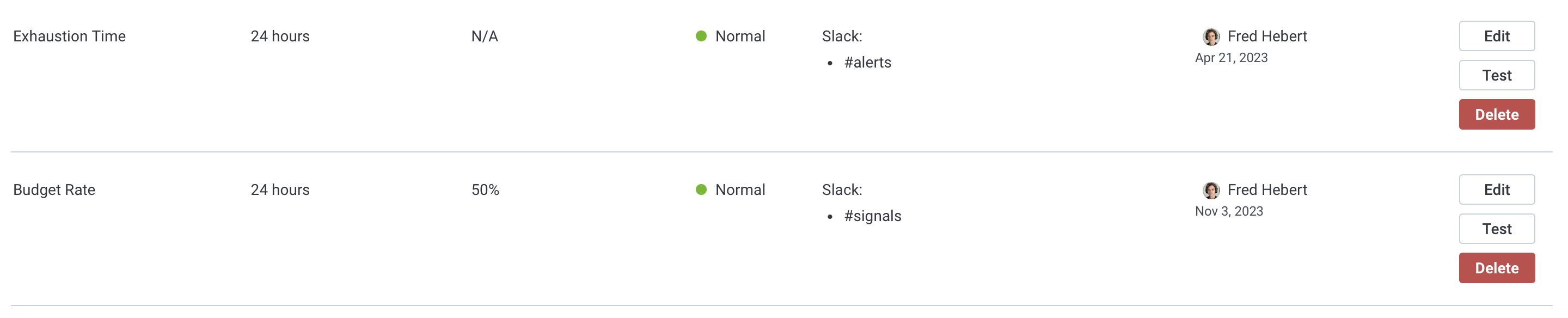

After creation, Burn Alert notification testing becomes available. Use this feature to test if Burn Alert notifications appear as expected before an alert situation occurs.

To test your Burn Alert notifications:

TRIGGERED and RESOLVED message via the configured notification option(s).

Test messages are prefixed with BURN ALERT TEST.

Burn Alerts can be viewed in several locations:

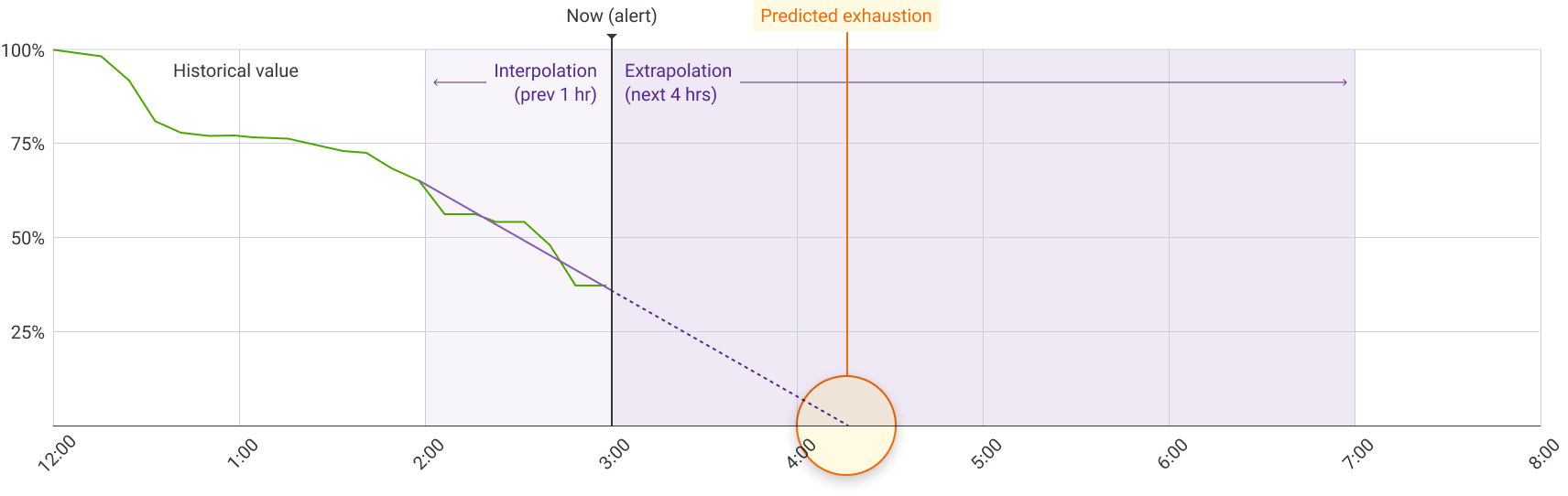

Honeycomb computes whether an Exhaustion Time burn alert may occur by extrapolating the current rate of budget burn.

If the projected budget reaches zero percent (0%) within the number of hours specified in the alert, Honeycomb sends a notification.

Honeycomb determines the extrapolation window by dividing the alert’s Exhaustion Time by 4. Honeycomb looks at the past data in the extrapolation window and then extrapolates what may happen over the specified number of Exhaustion Time hours.

An Exhaustion Time burn alert stays activated until the SLO budget is no longer projected to be exhausted within the defined Exhaustion Time. (Honeycomb also applies a small buffer period to avoid fluctuating notification events.) Once the alert resolves, Honeycomb sends a notification.

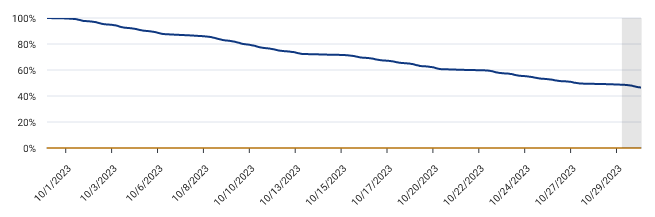

The example below shows how a 4-hour Exhaustion Time alert works. Honeycomb looks at the last hour, which is the extrapolation window (4 hours divided by 4), and then extrapolates what may happen over the next four hours, which is the Exhaustion Time value. Based on this data, the projected budget dips below zero within four hours, so Honeycomb sends a notification.
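The extrapolation described above can be sketched in Python. This is a minimal sketch, assuming a straight-line burn rate computed from the budget at the start and end of the extrapolation window; Honeycomb's exact method may differ, and the resolution buffer is not modeled. The function names and budget values are hypothetical.

```python
def extrapolation_window_hours(exhaustion_time_hours: float) -> float:
    # The extrapolation window is the alert's Exhaustion Time divided by 4.
    return exhaustion_time_hours / 4


def projected_budget_pct(
    budget_at_window_start: float, budget_now: float, exhaustion_time_hours: float
) -> float:
    window = extrapolation_window_hours(exhaustion_time_hours)
    # Assumed linear burn rate, in budget percentage points per hour.
    burn_rate_per_hour = (budget_at_window_start - budget_now) / window
    # Carry the current rate forward for the full Exhaustion Time.
    return budget_now - burn_rate_per_hour * exhaustion_time_hours


def exhaustion_alert_fires(
    budget_at_window_start: float, budget_now: float, exhaustion_time_hours: float
) -> bool:
    # Notify if the projected budget reaches 0% within the Exhaustion Time.
    projected = projected_budget_pct(budget_at_window_start, budget_now, exhaustion_time_hours)
    return projected <= 0


# 4-hour Exhaustion Time -> 1-hour extrapolation window (hypothetical budget values).
print(exhaustion_alert_fires(20, 15, 4))  # True: 15% - 5%/h * 4h = -5%
print(exhaustion_alert_fires(20, 18, 4))  # False: 18% - 2%/h * 4h = 10%
```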

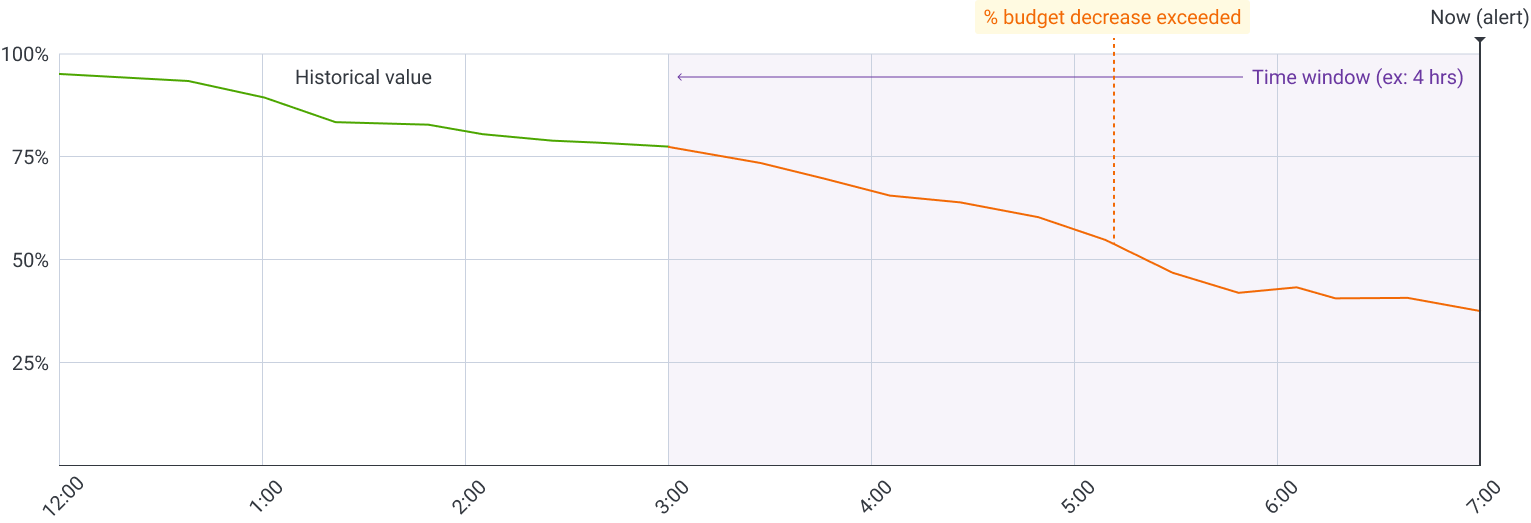

Honeycomb computes whether a Budget Rate burn alert may occur by evaluating historical events in a given time window. A Budget Rate is determined by a drop in budget percentage over a time window. If budget decreases, at minimum, by the configured Budget Decrease value, then Honeycomb sends a notification.

This alert resolves when the consumed budget within the time window is less than the specified Budget Decrease value in the Budget Rate burn alert. (Honeycomb also applies a small buffer to avoid fluctuating notification events.) Once resolved, Honeycomb sends a notification.

The example below shows how Honeycomb evaluates a Budget Rate burn alert with a 4-hour Time Window and a 30% Budget Decrease value. The shaded section shows the last four hours for this SLO. Within this range, Honeycomb evaluates the budget at the start and end of the time window. In this example, the budget starts at 78% and ends at 38%, a 40% overall decrease. Because the SLO experienced a 40% overall decrease and the Budget Decrease value is 30%, Honeycomb sends a notification.
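The Budget Rate check in this example reduces to a subtraction and a comparison. The following minimal Python sketch assumes the decrease is measured in percentage points between the start and end of the Time Window and that "at minimum" means greater than or equal to the threshold; resolution and its buffer are left out. The function names are hypothetical.

```python
def budget_decrease_pct(budget_at_window_start: float, budget_now: float) -> float:
    # Budget consumed between the start and the end of the Time Window,
    # in percentage points of the total SLO budget.
    return budget_at_window_start - budget_now


def budget_rate_alert_fires(
    budget_at_window_start: float, budget_now: float, threshold_pct: float
) -> bool:
    # Fire when the budget dropped by at least the configured Budget Decrease.
    return budget_decrease_pct(budget_at_window_start, budget_now) >= threshold_pct


# The example above: 78% -> 38% over a 4-hour Time Window, with a 30% Budget Decrease.
print(budget_decrease_pct(78, 38))          # 40
print(budget_rate_alert_fires(78, 38, 30))  # True
```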

Honeycomb aims to evaluate SLO Burn Alerts every minute. If you configure a Budget Rate burn alert for a 10% Budget Decrease, an alert notification occurs when the latest evaluation shows a decrease greater than 10%. Whether the evaluated decrease is 12% or 10.1%, an alert occurs.

If alerting on a decrease only 0.1% over the Budget Decrease value is too sensitive, increase the Budget Decrease value.

We recommend that you follow certain best practices when creating alerts. Some of these are general guidelines, and some are specific to alert type.

Regardless of the alert type:

When choosing the length of time for a given Exhaustion Time burn alert, consider the context and goals of your organization. Ask questions to help frame the definition of some initial Exhaustion Time burn alerts. If you are X hours away from running out of budget:

For example, a 24-hour exhaustion time alert can be useful if service quality is slowly degrading and a Slack-based notification allows the team to remediate the issue before the budget reaches zero (0).

Alternatively, a 4-hour exhaustion time alert may be more urgent and require a pager notification, such as from PagerDuty.

0.

This will notify you when your SLO budget is completely exhausted.

When starting with a Budget Rate burn alert, consider whether you seek an alert for a smooth, slow burn or a fast, abrupt drop. Start with a less-sensitive alert and adjust as needed. Depending on the length of your SLO’s time period, try these values when creating Budget Rate burn alerts.

Use the following example to create a series of Budget Rate burn alerts for your SLO. Each row represents an alert and its values.

| Budget Decrease (%) | Time Window | Notification Type |
|---|---|---|
| 2% | 1 hour | PagerDuty |
| 5% | 6 hours | PagerDuty |
| 10% | 3 days | Slack |

Use the following example to create a series of Budget Rate burn alerts for your SLO. Each row represents an alert and its values.

| Budget Decrease (%) | Time Window | Notification Type |
|---|---|---|
| 8.5% | 1 hour | PagerDuty |
| 21.5% | 6 hours | PagerDuty |
| 43.2% | 3 days | Slack |
| 50% | 3.5 days | Slack |

A long Time Window, such as 24 hours, is useful in detecting long, slow burns that use up your SLO budget faster than expected, but not fast enough to wake someone out of bed. A short Time Window, such as one hour, is useful in detecting very fast SLO budget burns that need to be addressed quickly.

Use the time window to determine the alert method. For example:

Although it may be counterintuitive, a Budget Rate alert with a long time window will also activate on a short, fast burn.

For example, if you have two Budget Rate burn alerts with the parameters:

If your environment encountered a large spike of errors and burned 25% of your SLO budget in the last hour, both the 24-hour Budget Rate burn alert and the 1-hour Budget Rate burn alert will fire, because both include the last hour in their calculation.
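The overlap between a short and a long Time Window can be shown with a small Python sketch. It assumes hourly budget samples and measures each window's decrease from the sample one window earlier to the latest sample. The 5% and 20% Budget Decrease values and the `ALERTS` mapping are hypothetical, chosen only for illustration.

```python
def decrease_over_window(hourly_budget_pct: list[float], window_hours: int) -> float:
    # Budget at the start of the window minus the latest budget sample.
    return hourly_budget_pct[-1 - window_hours] - hourly_budget_pct[-1]


# Hypothetical alerts: name -> (Time Window in hours, Budget Decrease in %).
ALERTS = {"1-hour": (1, 5.0), "24-hour": (24, 20.0)}


def firing_alerts(hourly_budget_pct: list[float]) -> list[str]:
    return [
        name
        for name, (window, threshold) in ALERTS.items()
        if decrease_over_window(hourly_budget_pct, window) >= threshold
    ]


# Fast burn: flat at 80% for a day, then 25% of the budget gone in the last hour.
fast_burn = [80.0] * 24 + [55.0]
# Slow burn: 1% of the budget per hour for 24 hours.
slow_burn = [80.0 - h for h in range(25)]

print(firing_alerts(fast_burn))  # ['1-hour', '24-hour']
print(firing_alerts(slow_burn))  # ['24-hour']
```

The 24-hour window contains the last hour, so the fast burn counts toward both alerts, while only the long window accumulates enough of the slow burn to fire.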

You might ask: if both Burn Alerts activate, why create both? You need both if you want to control where each Burn Alert sends its notification.

To control the frequency of your Burn Alert and calibrate its sensitivity:

You can use Burn Alerts in a variety of ways. Some examples include:

Audience: Teams that use SLOs to decide what to prioritize and how to allocate resources in their organization.

Example scenario: Your SLO says that 99% of web requests should complete in less than 250 ms. Any request that takes longer than 250 ms is a failure and burns some of your SLO budget. You need to know how long you have until your SLO budget is exhausted if failures continue at the current rate.
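The budget arithmetic behind this scenario can be sketched in Python. This is a simplified model, assuming the budget is the 1% of eligible requests allowed to fail during the SLO period and that failures continue at a constant hourly rate; Honeycomb's own budget accounting may differ. The request counts and failure rate are hypothetical.

```python
SLO_TARGET_PCT = 99.0        # 99% of web requests must succeed
LATENCY_THRESHOLD_MS = 250   # a request succeeds only if it completes in under 250 ms


def is_failure(duration_ms: float) -> bool:
    # Any request that does not complete in under 250 ms burns budget.
    return duration_ms >= LATENCY_THRESHOLD_MS


def allowed_failures(eligible_requests: int) -> float:
    # The budget: the share of requests that may fail during the SLO period.
    return eligible_requests * (100 - SLO_TARGET_PCT) / 100


def remaining_budget_pct(eligible_requests: int, failed_requests: int) -> float:
    return 100 * (1 - failed_requests / allowed_failures(eligible_requests))


def hours_until_exhausted(
    eligible_requests: int, failed_requests: int, failures_per_hour: float
) -> float:
    # Time left if failures continue at the current rate.
    return (allowed_failures(eligible_requests) - failed_requests) / failures_per_hour


# Hypothetical: 1,000,000 eligible requests, 2,500 slow so far, 500 more per hour.
print(remaining_budget_pct(1_000_000, 2_500))        # 75.0
print(hours_until_exhausted(1_000_000, 2_500, 500))  # 15.0
```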

Solution: Set up two Exhaustion Time burn alerts, one for each alert signal:

Audience: Teams that want more granularity when identifying significant issues that impact their SLO budget. The team wants to know when unexpected spikes are occurring, even if issues are not pageable events, so they can investigate later.

Example scenario: Your SLO says that 99% of web requests should complete in less than 250 ms. Any request that takes longer than 250 ms is a failure and burns some of your SLO budget. You review your SLO and notice that you burned through over 15% of your budget in half a day:

You investigate and determine that the issues that caused the budget burn are worth being notified about.

Solution: Create a Budget Rate burn alert to notify you when your budget decreases by 10% within a 6-hour time window. Because you can deal with this burn during normal business hours, you set the alert to notify staff through Slack, but because you may want to investigate the event later, you also create a ticket.

Audience: Teams that have relatively stable services that burn at a consistent rate, so a sudden increase in burn rate would indicate an issue worth investigating.

Example scenario: Your SLO says that 99% of web requests should complete in less than 250 ms. Any request that takes longer than 250 ms is a failure and burns some of your SLO budget. Your services burn at a consistent rate:

You decide that you want to know about any changes to this consistent, steady burn rate, so you can investigate.

Solution: Create a Budget Rate burn alert to notify you when the SLO does not burn as expected. Because you can deal with this burn during normal business hours, you set the alert to notify staff through Slack, but because you may want to investigate the event later, you also create a ticket.

Audience: Teams that want to be sure that issues exhausting their SLO budget are resolved after receiving an Exhaustion Time burn alert. Because Exhaustion Time burn alerts will not alert again until after they resolve, a team may want to track whether a budget burn remains or recurs.

Example scenario: You receive an Exhaustion Time burn alert and discover an outage, which you solve. You want to make sure that your solution addressed the actual cause and that you resolved the problem.

Solution: Create a Budget Rate burn alert to use operationally alongside the Exhaustion Time burn alert. The Budget Rate burn alert notifies you if your SLO continues to burn at a high rate. Because you need to deal with any continual or recurring burn immediately, you set the alert to notify staff through PagerDuty.

Because an incident can dramatically deplete your SLO budget, an Exhaustion Time burn alert may take a long time to resolve even after addressing the incident and deploying a fix. It takes time for this data to age out and recovery to occur.

This means that an Exhaustion Time burn alert can remain in a fired state long after triggering, even once the underlying issue is fixed.

If your budget stabilizes, and then starts burning again without ever going back above zero percent (0%), you will not be alerted a second time.
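Notifying only on state changes explains why a renewed burn does not produce a second alert. The following is a minimal Python sketch of that behavior, assuming each evaluation reports whether the budget is still projected to exhaust within the Exhaustion Time; the `ExhaustionAlert` class is hypothetical and ignores the resolution buffer.

```python
from typing import Optional


class ExhaustionAlert:
    """Hypothetical model: notifications are sent only when the state changes."""

    def __init__(self) -> None:
        self.fired = False

    def evaluate(self, projected_to_exhaust: bool) -> Optional[str]:
        if projected_to_exhaust and not self.fired:
            self.fired = True
            return "TRIGGERED"
        if not projected_to_exhaust and self.fired:
            self.fired = False
            return "RESOLVED"
        return None  # state unchanged, so no new notification


alert = ExhaustionAlert()
# Budget burns, stabilizes while still projected to exhaust, then burns again.
print([alert.evaluate(p) for p in [True, True, True, True]])
# ['TRIGGERED', None, None, None]
```

Only a resolution in between (a `False` evaluation) would allow a second TRIGGERED notification.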

If this scenario is not ideal, you can do one of the following: