Honeycomb SLO allows you to define and monitor Service Level Objectives (SLOs) for your organization. SLOs allow you to define and enforce an agreement between two parties regarding the delivery of a given service. In many cases, SLOs are defined between service providers and customers, but they are also useful within an organization to clarify agreed-upon priorities for service delivery and for feature, bug, and technical debt work.

SLOs are not only a technical feature, but also a philosophy of monitoring and managing systems as articulated in the Google SRE book among other sources. Using Honeycomb SLO, you can describe and implement SLOs and be alerted in a timely and appropriate way.

To learn more about guidelines for using SLOs and Triggers for alerting, visit Guidelines for SLOs and Trigger Alerts.

An SLI is a service level indicator. It is a way of expressing, on a per-event level, whether your system is succeeding.

The SLO is the service level objective, which states how often the SLI must succeed over a given time period. An SLO is expressed as a percentage or ratio over a rolling time window, such as “99.9% for any given thirty days.”

The Error Budget is the total number of failures tolerated by your SLO, whether measured by events or by time. For example, if a million events come in over 30 days, then a 99.9% compliance level means you can have 1,000 failed events over those thirty days. Another way to think about error budgets is in terms of time: if traffic is uniform and there are no brownouts or partial failures, a 99% target (a 1% error budget) allows roughly 7 hours of downtime per month, while a 99.9% target allows about 44 minutes of downtime per month.
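The arithmetic behind these figures can be sketched in a few lines of Python. The function names are illustrative and are not part of any Honeycomb API. Note that a strict 30-day window at 99.9% works out to about 43 minutes; the slightly higher per-month figure corresponds to an average-length calendar month.

```python
def error_budget_events(total_events: int, target: float) -> float:
    """Failed events tolerated for a compliance target such as 0.999."""
    return total_events * (1.0 - target)


def error_budget_minutes(window_days: float, target: float) -> float:
    """Downtime tolerated, in minutes, assuming uniform traffic and total outages."""
    return window_days * 24 * 60 * (1.0 - target)


print(round(error_budget_events(1_000_000, 0.999)))    # failed events allowed
print(round(error_budget_minutes(30, 0.99) / 60, 1))   # hours of downtime at 99%
print(round(error_budget_minutes(30, 0.999), 1))       # minutes of downtime at 99.9%
```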

The Budget Burndown, or the remaining error budget, is the amount of unused error budget in the current time period. Consider again the service that gets a million hits in 30 days, maintaining a 99.9% level over trailing 30 days. If you have seen 550 failing events in the last 30 days, then you have 45% of your budget remaining.
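As a minimal sketch of this calculation (the function name is hypothetical, not a Honeycomb API), the remaining budget is one minus the share of the budget already spent:

```python
def budget_remaining(total_events: int, failed_events: int, target: float) -> float:
    """Fraction of the error budget still unused in the current window."""
    budget = total_events * (1.0 - target)  # failures the SLO tolerates
    return 1.0 - failed_events / budget


remaining = budget_remaining(total_events=1_000_000, failed_events=550, target=0.999)
print(f"{remaining:.0%}")
```

A negative result means the budget is already overspent for the window.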

The Burn Rate is how fast you are consuming your error budget relative to your SLO.

For example, an SLO that has a burn rate of 1.0 will consume the error budget at a rate that leaves you with zero (0) budget at the end of the SLO window.

If you consider a 30-day SLO, a consistent burn rate of 1.0 will leave you with zero (0) budget at 30 days. An SLO with a burn rate of 2.0 will leave you with zero (0) budget at 15 days.
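The relationship between a constant burn rate and the time until the budget runs out can be sketched as follows. This assumes a full budget at the start of the window and a burn rate that never changes; the function name is illustrative.

```python
import math


def days_until_exhausted(window_days: float, burn_rate: float) -> float:
    """Days until a full error budget reaches zero at a constant burn rate."""
    if burn_rate <= 0:
        return math.inf  # nothing is being burned, so the budget never runs out
    return window_days / burn_rate


print(days_until_exhausted(30, 1.0))  # 30.0
print(days_until_exhausted(30, 2.0))  # 15.0
```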

You may care about burn rate if you seek to understand the severity of issues in your SLO.

Burn Rate is not the same as an error rate in an SLO. An error rate is the number of errors divided by the total number of events in the last time period. Burn rate is the ratio of the actual error rate to the error rate that the SLO allows (for example, 0.1% for a 99.9% target).
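To make the distinction concrete, here is a small sketch that computes both values from the same counts. The numbers are made up for illustration, and the function names are not part of any Honeycomb API.

```python
def error_rate(failed_events: int, total_events: int) -> float:
    """Share of events that failed in the measured period."""
    return failed_events / total_events


def burn_rate(failed_events: int, total_events: int, target: float) -> float:
    """Actual error rate divided by the error rate the SLO target allows."""
    allowed = 1.0 - target
    return error_rate(failed_events, total_events) / allowed


# Hypothetical period: 50 failures out of 10,000 events against a 99.9% target.
print(f"error rate: {error_rate(50, 10_000):.2%}")
print(f"burn rate:  {burn_rate(50, 10_000, 0.999):.1f}")
```

Here the error rate is only 0.5%, yet the burn rate is about 5, meaning the budget is being spent roughly five times faster than the SLO can sustain.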

Burn rate helps gauge the impact that errors have on your services based on the agreed-upon reliability goals.

If the burn rate is greater than 1.0, it indicates that the service is experiencing more errors than it should according to its SLOs.

A Burn Alert is an alert that signals that the error budget is being burned down rapidly.

In addition to establishing acceptable/desired service levels overall, an SLO provides context that allows different members of the team to make good decisions about things related to the service and its availability, performance, and so on. For example, the on-call team will be able to tell if an error is important enough to get out of bed for, and management will be able to report with precision on just how degraded a service is. SLOs can also provide an agreed-upon set of priorities for the organization to use when making decisions to develop new functionality or fix bugs vs invest in infrastructure upgrades, and so on.

Having SLOs means you can make clear and accurate statements about the impact of both production incidents and development activities on your overall quality of service.

Deciding what to track using SLOs can be made easier if you keep the following tips in mind:

Be as close to the system’s edge as possible, then rely on tools like BubbleUp to pinpoint issues.

Prefer tracking based on user workflows over internal team structures. Make SLOs more relevant to actual user concerns.

Focus on actionable issues worth paging on. Filter out legitimate reasons for a user to see a failure (for example, invalid credentials or user disconnects).

Identify measures that depend on load, and prefer versions of them that remain consistent as input grows.

For example, if you have upload endpoints that may receive large files, making the SLO’s success independent of the payload size will make the SLO more reliable. In this case, consider using transfer speed instead of response time to normalize performance across payload sizes.
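A per-event check based on transfer speed might look like the sketch below. The field names `payload_bytes` and `duration_ms` and the 1 MB/s threshold are assumptions chosen for illustration; in Honeycomb the equivalent logic would live in a Calculated Field rather than in Python.

```python
def upload_sli(payload_bytes: int, duration_ms: float,
               min_bytes_per_sec: float = 1_000_000.0) -> bool:
    """Return True when the upload met the (assumed) minimum transfer speed."""
    if duration_ms <= 0:
        # No measurable duration: treat as success rather than divide by zero.
        return True
    bytes_per_sec = payload_bytes / (duration_ms / 1000.0)
    return bytes_per_sec >= min_bytes_per_sec


# A 200 MB upload that takes 40 s is healthy even though it is "slow" in absolute terms.
print(upload_sli(200_000_000, 40_000))
# The same upload taking 400 s falls below the assumed threshold.
print(upload_sli(200_000_000, 400_000))
```

Very small uploads can be dominated by fixed per-request overhead, so a real SLI may need to treat them separately.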

Consider more comprehensive, broad SLOs for key performance events. This makes them self-contained and friendlier to use than creating multiple distinct SLOs or filtering various unrelated spans within a single calculated field.

For example, aim for 500 ms response times for “normal” interactive endpoints but allow a few more seconds on authentication endpoints that intentionally slow down when hashing passwords. This approach is useful because load on either kind of endpoint can still impact your infrastructure, and having both types of signal within the same service level indicator (SLI) can uncover unexpected interactions between them.
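One way to picture a single SLI with per-endpoint thresholds is the sketch below. The endpoint prefixes and the 3,000 ms allowance for authentication are assumptions for illustration only; the source specifies just "a few more seconds".

```python
# Hypothetical route prefixes for endpoints that hash passwords.
AUTH_PREFIXES = ("/login", "/auth")

NORMAL_LIMIT_MS = 500    # target for interactive endpoints
AUTH_LIMIT_MS = 3_000    # assumed allowance for authentication endpoints


def latency_sli(endpoint: str, duration_ms: float) -> bool:
    """Return True when the event met the latency limit for its endpoint type."""
    limit = AUTH_LIMIT_MS if endpoint.startswith(AUTH_PREFIXES) else NORMAL_LIMIT_MS
    return duration_ms < limit


print(latency_sli("/cart", 320))     # within the 500 ms target
print(latency_sli("/login", 1_800))  # slow, but acceptable for authentication
print(latency_sli("/cart", 1_800))   # too slow for an interactive endpoint
```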

Consider filtering out specific customers or bad actions (for example, pen-testing) as a temporary solution for better understanding. Use filtering when:

When you structure SLOs, follow these guidelines:

Develop SLOs incrementally, starting with an initial signal and gradually reducing noise for clearer signals.

Ideally, include new code paths for existing features in existing SLOs. If a new path’s volume is so low that 100% downtime would not alert anyone, consider creating a different SLO to track it separately.

In cases where your observability does not align well with detecting both failures and performance issues within a single SLO, monitor effectively by developing separate SLOs–one to monitor failures and one to monitor performance issues.

When determining a target budget and percentage, take an iterative approach.

Whenever possible, organize observability around features rather than code structure. For additional insights, visit our blog post: Data Availability Isn’t Observability.

Document any exceptions or intricacies related to the SLI within the SLO description. Doing so allows you to add more extended comments than on the SLI page itself.

Honeycomb allows you to create an SLO that shares its budget across up to 10 services. The SLI attached to a multi-service SLO must be created as a Calculated Field at the environment level. To understand how to query on these SLIs in Query Builder, see our example of Calculated Fields in multiple datasets.

In most cases, you can define the correct SLO for an experience by putting the SLO on only one service–the service that is closest to your end users, which is also known as the edge service.

Some cases in which you should define an SLO across multiple services include:

| Use Case | Description |
|---|---|
| Your architecture contains multiple edge services | When your users use your service from many locations rather than from one centralized place, you may need multiple services on your SLO. For example, users may interface via service meshes or API Gateways. |
| You are migrating from a legacy monolith to microservices | If you are gradually migrating a part of your system, create SLOs that span both legacy and non-legacy components. |
| You have hot paths for critical flows | If you have a critical flow that goes through multiple key services, create an SLO that spans those key services. |

Use the following guidance to determine whether your use case is possible for SLOs with multiple services.

Honeycomb calculates SLOs as independent buckets of successful versus failed events. As such, you cannot define criteria that reference values from multiple events or spans.

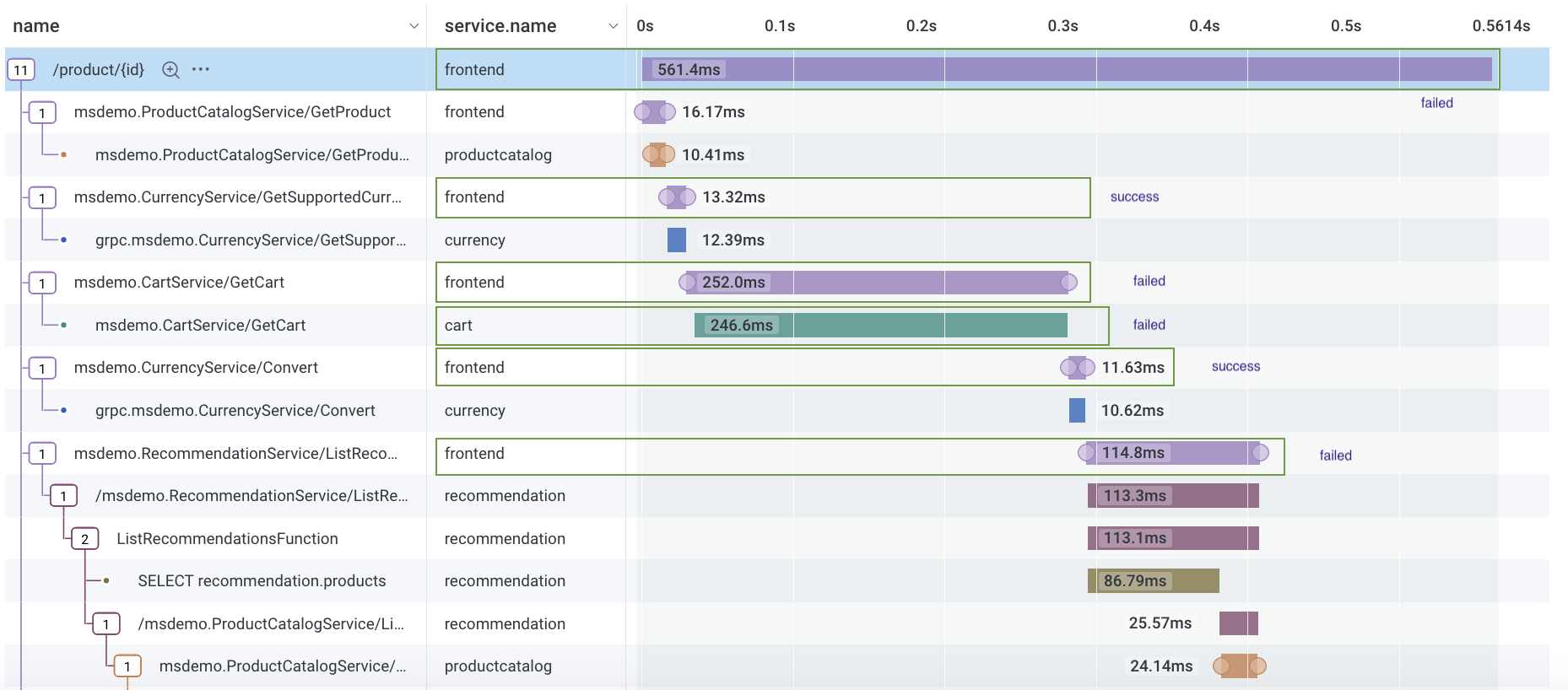

Supported scenario: Your SLO includes both frontend and cart services.

Your SLI defines success as events with duration_ms < 50 ms.

Your events can be categorized as successful or failed, so Honeycomb supports this use case.

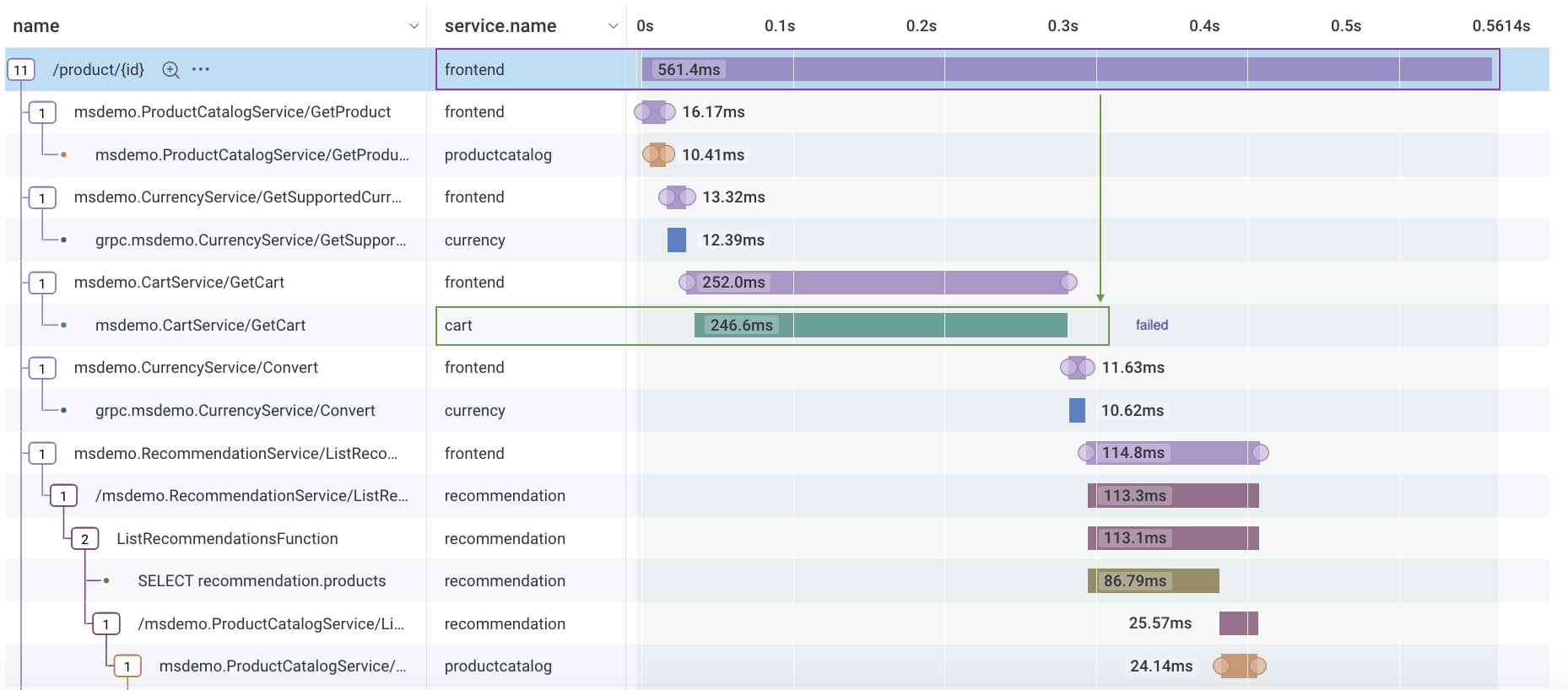

Unsupported scenario: Your SLO includes both frontend and cart services.

Your SLI defines success as events with duration_ms < 50 ms on cart events that are a child span of frontend.

In this case, you would need to combine two events in order to determine success or failure, so Honeycomb does not support this use case.

Honeycomb does not allow you to create an SLO that spans every service in your environment.

Instead, we recommend grouping related SLOs (for example, by team or by product area).

SLOs applied to multiple services count only events from the specified services.

For example, assume your environment contains four services named service_a, service_b, service_c, and service_d.

Assume your SLO includes service_a, service_b, and service_c.

You can expect the following:

Your SLO will ignore all events from service_d.

Your SLO will evaluate the environment-level calculated field (your SLI) against all events in service_a, service_b, and service_c.

Events are not weighted differently based on traffic.

For example, if service_a receives 2 events with 1 failure, service_b receives 3 events with 1 failure, and service_c receives 15 events with 2 failures, your SLO will calculate the following SLI: (number of successful events) / (number of total events) = (1 + 2 + 13) / (2 + 3 + 15) = 80%.
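The pooled calculation can be sketched as follows. The counts for service_d are invented purely to show that events outside the SLO are ignored, and the function name is illustrative rather than a Honeycomb API.

```python
# (total events, failed events) per service; service_d's counts are hypothetical.
events_by_service = {
    "service_a": (2, 1),
    "service_b": (3, 1),
    "service_c": (15, 2),
    "service_d": (100, 50),
}

slo_services = {"service_a", "service_b", "service_c"}


def pooled_compliance(counts: dict, services: set) -> float:
    """Successful events divided by total events, pooled across the SLO's services."""
    total = sum(t for name, (t, _) in counts.items() if name in services)
    failed = sum(f for name, (_, f) in counts.items() if name in services)
    return (total - failed) / total


print(f"{pooled_compliance(events_by_service, slo_services):.0%}")
```

Because events are pooled before dividing, a high-traffic service such as service_c influences the result more than a low-traffic one; this is not the same as averaging each service's individual success rate.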

You can use BubbleUp with Multi-Service SLOs to determine the differences between your failed and successful events–similar to how you use it on single-service SLOs–but you will see some slight changes:

service.name and service_name), Honeycomb will run an environment-wide query without any filtering on service name.

Multi-service SLOs do not support team activity logs.